Question: Stochastic gradient descent (SGD) is a simple but widely applicable optimization technique. For example, we can use it to train a Support Vector Machine! The

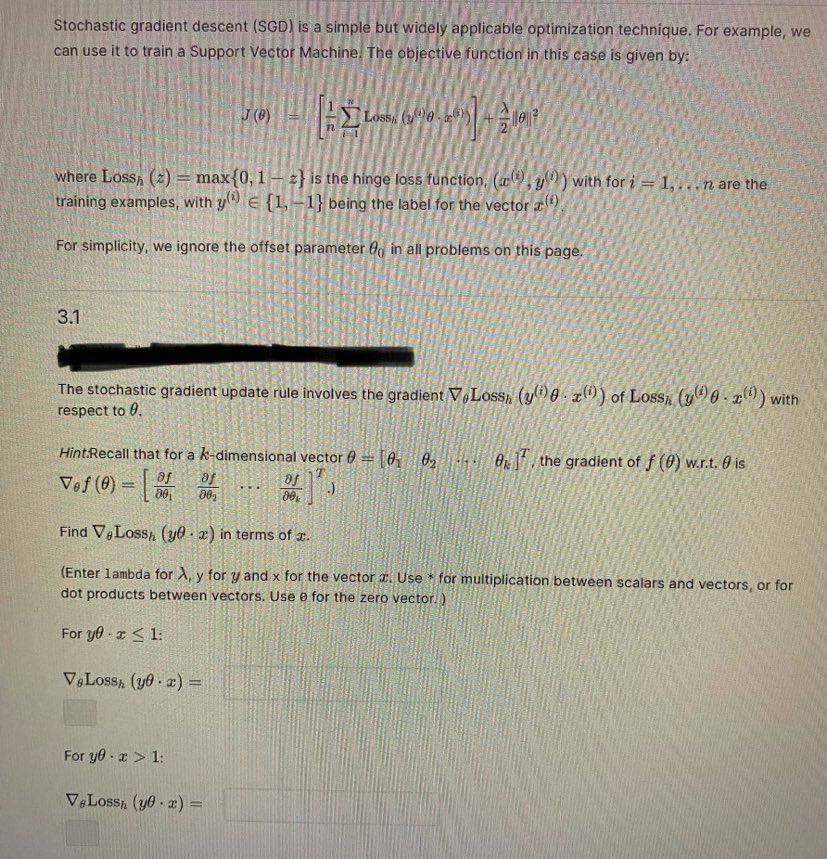

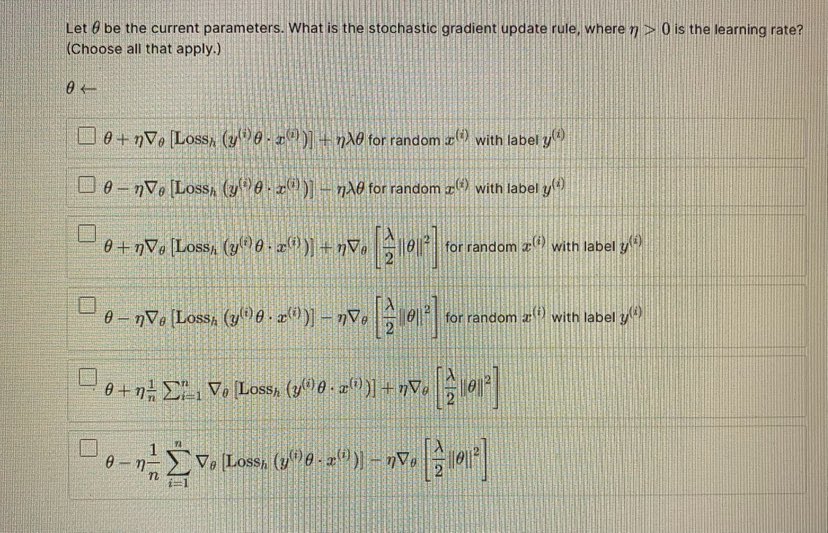

Stochastic gradient descent (SGD) is a simple but widely applicable optimization technique. For example, we can use it to train a Support Vector Machine! The objective function in this case is given by: where Loss, (z) = max {0, 1 - z} is the hinge loss function, (), y" ) with for i = 1, . . . n are the training examples, with y E {1, -1} being the label for the vector a (). For simplicity, we ignore the offset parameter Go in all problems on this page. 3.1 The stochastic gradient update rule involves the gradient V,Loss, (ye . x(") ) of Loss, (1 0) 0 . 2(!) ) with respect to 0. Hint Recall that for a -dimensional vector 0 = [01 02 . .|0: ] . the gradient of f (0) w.r.t. 0 is Vof ( 0 ) - 30 Find V:Lossh (y0 . ) in terms of I. (Enter lambda for ), y for y and x for the vector c. Use * for multiplication between scalars and vectors, or for dot products between vectors. Use e for the zero vector.) For y0 . x 5 1: VoLossh (y0 . x) = For yo . x > 1: = (:T . Ofi) USSOTB ALet & be the current parameters. What is the stochastic gradient update rule, where > >> 0 is the learning rate? Choose all that apply.) We + nVe Loss, (y : 2() + ne for random I ) with label y.") e - nVo Loss, (y)e . >() )Ae for random ) with label y" 8 + nVe Loss, (y)0 . z() )|+ nVe ale|for random a with label y 0 - nVe Loss, (y ?)0 . :() )]- mVe| |je|17|for random a with label y 0 + n- > Vo Lossh (y) 8 : 2() )| + 78 5012 0 - n

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts