Question: Stochastic gradient descent (SGD) for training a Support Vector Machine

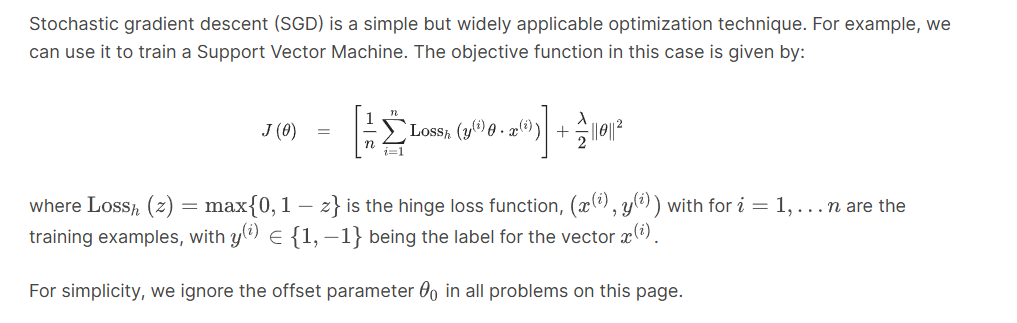

Stochastic gradient descent (SGD) is a simple but widely applicable optimization technique. For example, we can use it to train a Support Vector Machine. The objective function in this case is given by:

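$$J(\theta) = \frac{1}{n} \sum_{i=1}^{n} \text{Loss}_h\big(y^{(i)}\, \theta \cdot x^{(i)}\big) + \frac{\lambda}{2} \lVert \theta \rVert^2$$

where $\text{Loss}_h(z) = \max\{0, 1 - z\}$ is the hinge loss function, $(x^{(i)}, y^{(i)})$ for $i = 1, \dots, n$ are the training examples, with $y^{(i)} \in \{1, -1\}$ being the label for the vector $x^{(i)}$.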

For simplicity, we ignore the offset parameter in all problems on this page.
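To make the setup concrete, here is a minimal Python sketch of SGD on this kind of objective. It assumes the objective is the average hinge loss plus an L2 penalty of the form (λ/2)‖θ‖², with no offset parameter. The function names, the decaying step-size schedule, and the toy data in the usage note are illustrative assumptions, not part of the original problem.

```python
import random


def dot(u, v):
    """Inner product of two equal-length lists of floats."""
    return sum(a * b for a, b in zip(u, v))


def hinge_loss(z):
    """Hinge loss max{0, 1 - z}, where z is the agreement y * (theta . x)."""
    return max(0.0, 1.0 - z)


def objective(theta, xs, ys, lam):
    """Average hinge loss plus (lam / 2) * ||theta||^2 (assumed form, no offset)."""
    n = len(xs)
    avg_loss = sum(hinge_loss(y * dot(theta, x)) for x, y in zip(xs, ys)) / n
    return avg_loss + 0.5 * lam * dot(theta, theta)


def sgd_step(theta, x, y, lam, eta):
    """One SGD update using a subgradient of the single-example objective
    hinge_loss(y * theta . x) + (lam / 2) * ||theta||^2."""
    if y * dot(theta, x) < 1.0:
        # Hinge term is active: its subgradient is -y * x.
        grad = [lam * t - y * xi for t, xi in zip(theta, x)]
    else:
        # Hinge term is flat here: only the regularizer contributes.
        grad = [lam * t for t in theta]
    return [t - eta * g for t, g in zip(theta, grad)]


def train_svm_sgd(xs, ys, lam=0.01, epochs=50, seed=0):
    """Run SGD over shuffled examples, starting from theta = 0."""
    rng = random.Random(seed)
    theta = [0.0] * len(xs[0])
    t = 0
    for _ in range(epochs):
        order = list(range(len(xs)))
        rng.shuffle(order)
        for i in order:
            t += 1
            eta = 1.0 / (1.0 + lam * t)  # assumed decaying step size
            theta = sgd_step(theta, xs[i], ys[i], lam, eta)
    return theta
```

As a usage example on a small, linearly separable toy set (made up for illustration), `train_svm_sgd([[2.0, 1.0], [1.0, 2.0], [-1.0, -2.0], [-2.0, -1.0]], [1, 1, -1, -1])` returns a `theta` for which every `y * dot(theta, x)` is positive. Each update looks at a single example, which is what distinguishes SGD from full-batch gradient descent on the same objective.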
