Question: task3 The MIP is difficult to solve for large-scale problems with m > 1. As a remedy, we shall approximate the objective function in Task

task3

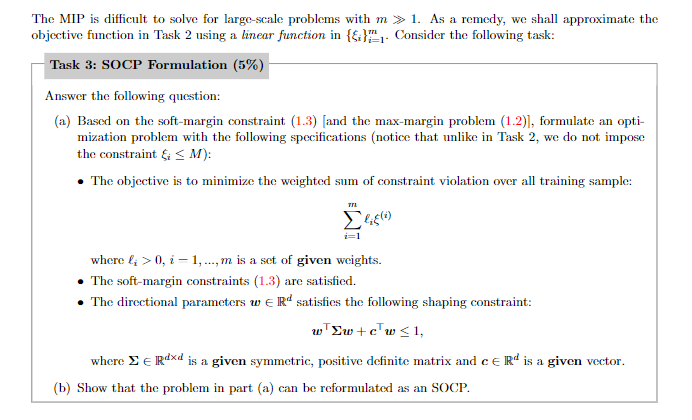

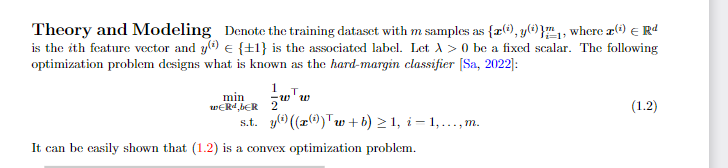

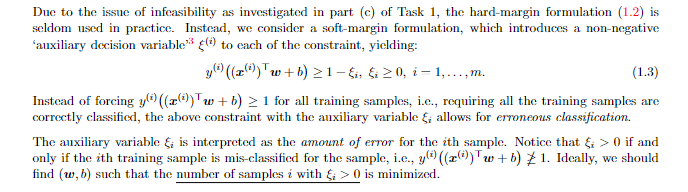

The MIP is difficult to solve for large-scale problems with m > 1. As a remedy, we shall approximate the objective function in Task 2 using a linear function in {e}.1. Consider the following task: Task 3: SOCP Formulation (5%) Answer the following question: (a) Based on the soft-margin constraint (1.3) and the max-margin problem (1-2)], formulate an opti- mization problem with the following specifications (notice that unlike in Task 2, we do not impose the constraint & 0, i = 1, ..., m is a sct of given weights. The soft-margin constraints (1.3) are satisfied. The directional parameters werd satisfies the following shaping constraint: w wEw+c'w 0 be a fixed scalar. The following optimization problem designs what is known as the hard-margin classifier (Sa, 2022]: a 1 T W'w (1.2) min WERLER 24 s.t. y(((z))"w+b) > 1, 1-1,..., m. It can be easily shown that (1.2) is a convex optimization problem. T Due to the issue of infeasibility as investigated in part (c) of Task 1, the hard-margin formulation (1.2) is seldom used in practice. Instead, we consider a soft-margin formulation, which introduces a non-negative auxiliary decision variable cli) to cach of the constraint, yielding: y ()"w+b) > 1 -&i, &i 20, 1,..., m. (1.3) Instead of forcing yl (2))"w+b) > 1 for all training samples, i.e., requiring all the training samples are correctly classified, the above constraint with the auxiliary variable & allows for erroneous classification. The auxiliary variable & is interpreted as the amount of error for the ith sample. Notice that & > 0 if and only if the ith training sample is mis-classified for the sample, i.c., y" ((z)Tw+b) 1. Ideally, we should find (w,b) such that the number of samples i with ti > 0 is minimized. +