Question: This is a question about machine learning neural networks.

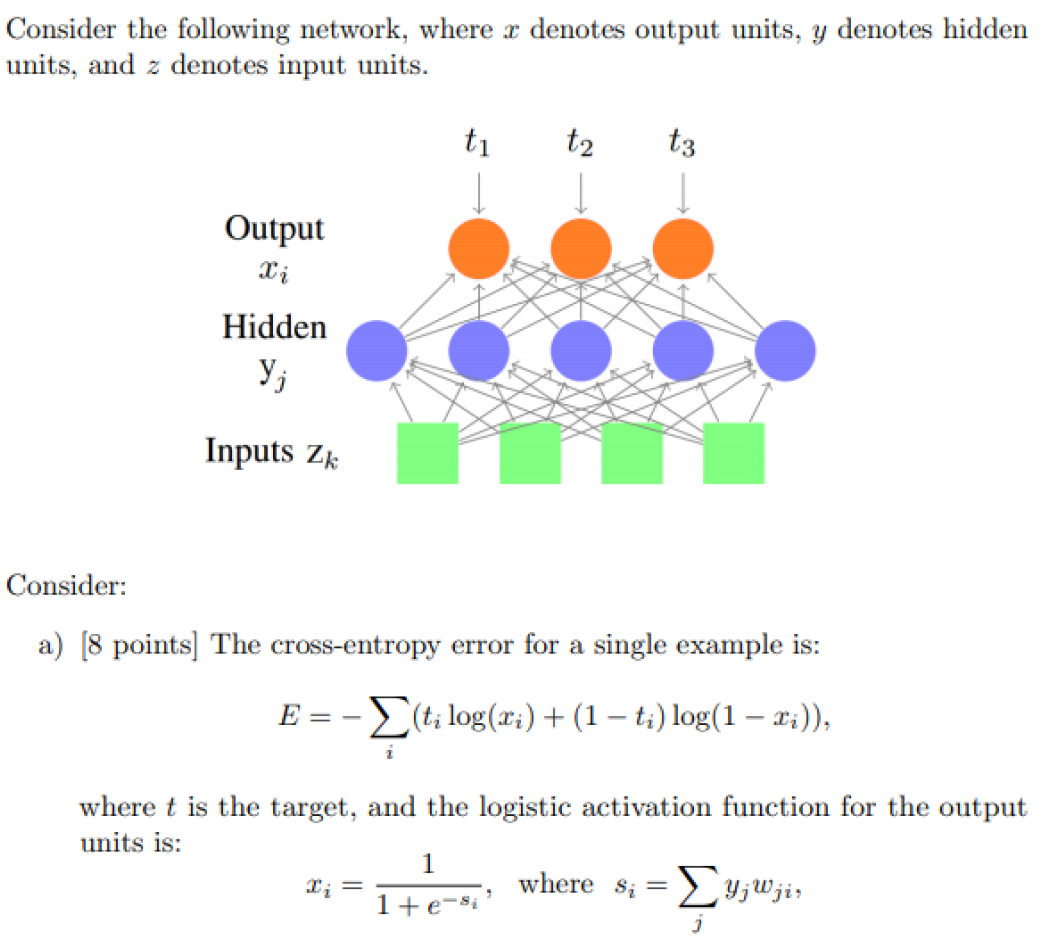

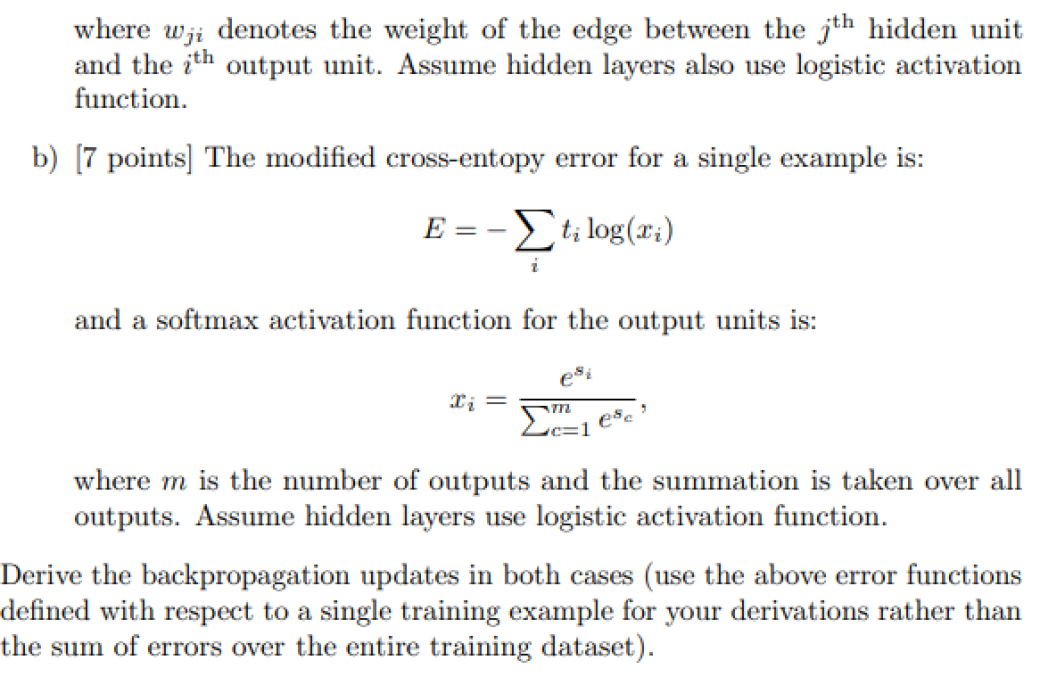

Consider the following network, where $z_i$ denotes output units, $y_j$ denotes hidden units, and $x_k$ denotes input units. Consider:

a) [8 points] The cross-entropy error for a single example is:

$$E = -\sum_{i} \left[ t_i \log(z_i) + (1 - t_i) \log(1 - z_i) \right]$$

where $t$ is the target, and the logistic activation function for the output units is:

$$z_i = \frac{1}{1 + e^{-a_i}}, \qquad a_i = \sum_{j} w_{ij} y_j$$

where $w_{ij}$ denotes the weight of the edge between the $j^{\text{th}}$ hidden unit and the $i^{\text{th}}$ output unit. Assume hidden layers also use the logistic activation function.

b) [7 points] The modified cross-entropy error for a single example is:

$$E = -\sum_{i} t_i \log(z_i)$$

and the softmax activation function for the output units is:

$$z_i = \frac{e^{a_i}}{\sum_{c=1}^{m} e^{a_c}}$$

where $m$ is the number of outputs and the summation is taken over all outputs. Assume hidden layers use the logistic activation function.

Derive the backpropagation updates in both cases (use the above error functions defined with respect to a single training example for your derivations rather than the sum of errors over the entire training dataset).
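One way to sanity-check a derivation for either case is to compare it against finite differences. The sketch below is only an illustration under stated assumptions: it uses one hidden layer and no bias terms, and it assumes the targets in the softmax case sum to 1 (for example, a one-hot vector). The names `V` (input-to-hidden weights), `W` (hidden-to-output weights), `x`, `y`, `z`, `t`, and all helper functions are hypothetical and do not come from the question. Under those assumptions, both error functions give the same output-layer error signal, `z_i - t_i`, and the hidden-layer signal follows from the chain rule through the logistic units.

```python
import math


def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))


def forward(x, V, W, output):
    """Return hidden activations y and output activations z."""
    y = [sigmoid(sum(v * xk for v, xk in zip(row, x))) for row in V]
    a = [sum(w * yj for w, yj in zip(row, y)) for row in W]
    if output == "logistic":
        z = [sigmoid(ai) for ai in a]
    else:  # softmax; subtracting max(a) only improves numerical stability
        top = max(a)
        exps = [math.exp(ai - top) for ai in a]
        total = sum(exps)
        z = [e / total for e in exps]
    return y, z


def error(z, t, output):
    """Single-example cross-entropy for case (a) or case (b)."""
    if output == "logistic":
        return -sum(ti * math.log(zi) + (1 - ti) * math.log(1 - zi)
                    for zi, ti in zip(z, t))
    return -sum(ti * math.log(zi) for zi, ti in zip(z, t))


def gradients(x, t, V, W, output):
    """Analytic gradients; assumes sum(t) == 1 in the softmax case."""
    y, z = forward(x, V, W, output)
    # Output-layer error signal: dE/da_i = z_i - t_i in both cases.
    delta_out = [zi - ti for zi, ti in zip(z, t)]
    # dE/dw_ij = delta_i * y_j
    grad_W = [[di * yj for yj in y] for di in delta_out]
    # Hidden-layer error signal: y_j (1 - y_j) * sum_i delta_i w_ij
    delta_hid = [
        y[j] * (1 - y[j]) * sum(delta_out[i] * W[i][j] for i in range(len(W)))
        for j in range(len(y))
    ]
    # dE/dv_jk = delta_j * x_k
    grad_V = [[dj * xk for xk in x] for dj in delta_hid]
    return grad_V, grad_W


def numeric_grad(x, t, V, W, output, which, r, c, eps=1e-6):
    """Central finite difference of the error w.r.t. one weight."""
    M = V if which == "V" else W
    old = M[r][c]
    M[r][c] = old + eps
    e_plus = error(forward(x, V, W, output)[1], t, output)
    M[r][c] = old - eps
    e_minus = error(forward(x, V, W, output)[1], t, output)
    M[r][c] = old
    return (e_plus - e_minus) / (2 * eps)


def max_grad_error(output):
    """Largest gap between analytic and numeric gradients on a toy example."""
    x = [0.5, -1.0]
    t = [1.0, 0.0]  # one-hot target, valid for both cases
    V = [[0.1, -0.2], [0.4, 0.3], [-0.5, 0.2]]
    W = [[0.3, -0.1, 0.2], [-0.4, 0.5, 0.1]]
    grad_V, grad_W = gradients(x, t, V, W, output)
    worst = 0.0
    for name, M, G in (("V", V, grad_V), ("W", W, grad_W)):
        for r in range(len(M)):
            for c in range(len(M[r])):
                approx = numeric_grad(x, t, V, W, output, name, r, c)
                worst = max(worst, abs(G[r][c] - approx))
    return worst


if __name__ == "__main__":
    print(max_grad_error("logistic"))
    print(max_grad_error("softmax"))
```

With a learning rate `eta` (also an assumed name), the corresponding gradient-descent updates would be `W[i][j] -= eta * grad_W[i][j]` and `V[j][k] -= eta * grad_V[j][k]`. If the softmax targets do not sum to 1, the output signal becomes `z_i * sum(t) - t_i` instead of `z_i - t_i`.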
