Question:

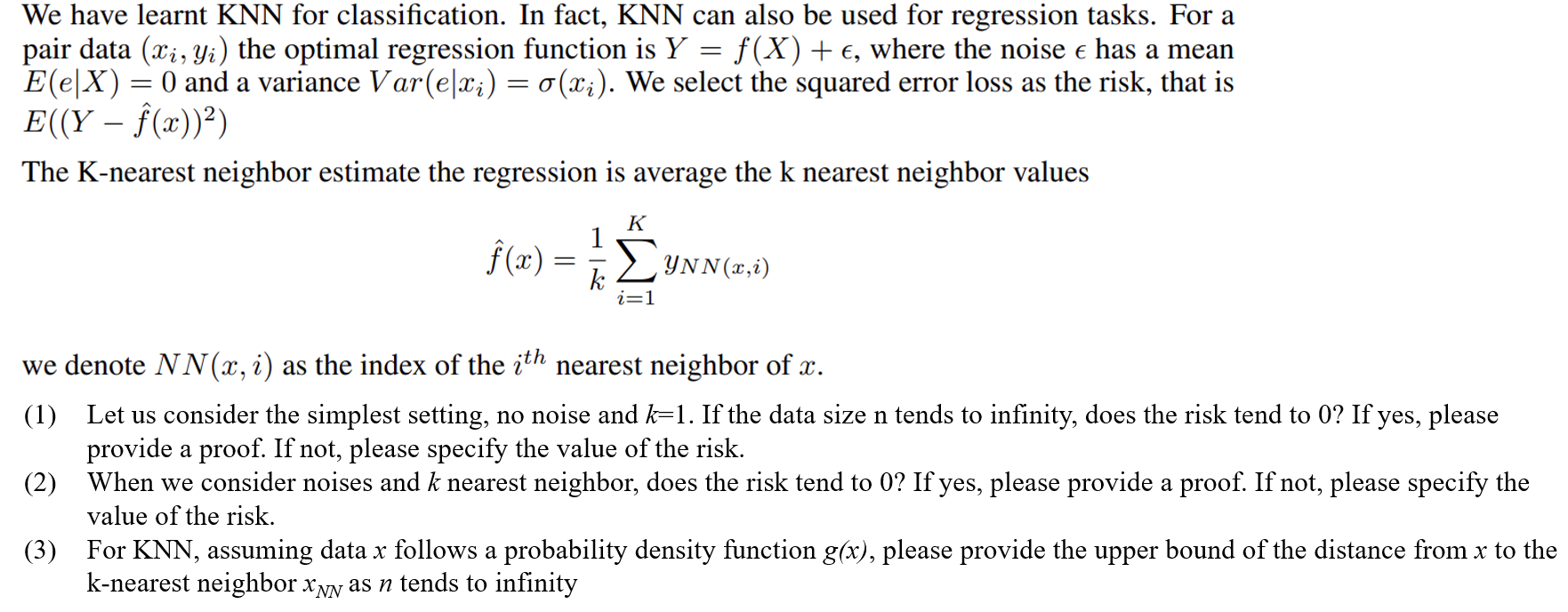

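We have learnt KNN for classification. In fact, KNN can also be used for regression tasks. For a pair of data $(x_i, y_i)$, the optimal regression function is $y = f(x) + \epsilon$, where the noise $\epsilon$ has mean $0$ and variance $\mathrm{Var}(\epsilon) = \sigma^2$. We select the squared error loss as the risk, that is, the expected squared error of the estimate $\hat{f}(x)$.

The K-nearest-neighbor estimate of the regression function is the average of the $K$ nearest neighbor values,

$$\hat{f}(x) = \frac{1}{K} \sum_{i=1}^{K} y_{(i)},$$

where we denote by $(i)$ the index of the $i$-th nearest neighbor of $x$.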
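The averaging estimate described above can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation: the function name `knn_regress`, the Euclidean distance, and the toy data in the usage note are assumptions introduced here.

```python
import numpy as np

def knn_regress(x_train, y_train, x_query, k=1):
    """Average the targets of the k training points nearest to each query point."""
    # Treat inputs as (n_points, n_features); 1-D lists become a single feature.
    x_train = np.asarray(x_train, dtype=float).reshape(len(x_train), -1)
    x_query = np.asarray(x_query, dtype=float).reshape(len(x_query), -1)
    y_train = np.asarray(y_train, dtype=float)
    # Pairwise squared Euclidean distances, shape (n_query, n_train).
    d2 = ((x_query[:, None, :] - x_train[None, :, :]) ** 2).sum(axis=2)
    # Indices of the k nearest training points for each query (unordered).
    nn_idx = np.argpartition(d2, k - 1, axis=1)[:, :k]
    # The estimate is the mean of the neighbors' target values.
    return y_train[nn_idx].mean(axis=1)
```

For example, with training inputs `[0, 1, 2, 3]` and targets `[0, 10, 20, 30]`, calling `knn_regress(..., [0.9], k=1)` returns the target of the single closest point, while `k=2` averages the two closest targets.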

Let us consider the simplest setting: no noise and $K = 1$. If the data size $N$ tends to infinity, does the risk tend to $0$? If yes, please provide a proof. If not, please specify the value of the risk.

When we consider noise and the 1-nearest neighbor, does the risk tend to $0$? If yes, please provide a proof. If not, please specify the value of the risk.
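Before attempting a proof, it can help to check both settings numerically. The sketch below is a Monte Carlo experiment under several assumptions that are not part of the question: the regression function is `np.sin`, inputs are uniform on $[0, 1]$, the noise is Gaussian, and the risk is measured against the noise-free value $f(x)$. The name `one_nn_risk` is hypothetical.

```python
import numpy as np

def one_nn_risk(n_train, sigma, n_query=500, seed=0):
    """Monte Carlo estimate of E[(f_hat(x) - f(x))^2] for 1-NN regression."""
    rng = np.random.default_rng(seed)
    f = np.sin  # assumed smooth regression function (illustrative choice)
    x_train = rng.uniform(0.0, 1.0, n_train)
    # Noisy targets; sigma = 0 gives the noise-free setting.
    y_train = f(x_train) + sigma * rng.standard_normal(n_train)
    x_query = rng.uniform(0.0, 1.0, n_query)
    # Index of the nearest training point for each query point.
    nn = np.abs(x_query[:, None] - x_train[None, :]).argmin(axis=1)
    # Squared error of the 1-NN prediction against the noise-free target.
    return float(np.mean((y_train[nn] - f(x_query)) ** 2))

# Compare the noise-free and noisy settings as the training set grows.
for n in (10, 100, 1000):
    print(n, one_nn_risk(n, sigma=0.0), one_nn_risk(n, sigma=0.5))
```

Comparing the two printed columns as `n` grows gives an empirical hint about which setting drives the risk to zero. It does not replace the proof the question asks for, and the numbers depend on the assumed risk definition.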

For KNN, assuming the data follows a probability density function $p(x)$, please provide the upper bound of the distance from $x$ to the $K$-th nearest neighbor as the data size $N$ tends to infinity.
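The behavior of this distance can be observed empirically before deriving a bound. The following sketch assumes a uniform density on $[0, 1]$ and a fixed query point `x0 = 0.5`; both choices, and the function name `kth_nn_distance`, are illustrative and not part of the question.

```python
import numpy as np

def kth_nn_distance(n, k, x0=0.5, seed=0):
    """Distance from x0 to its k-th nearest neighbor among n uniform samples."""
    rng = np.random.default_rng(seed)
    x = rng.uniform(0.0, 1.0, n)  # assumed density: uniform on [0, 1]
    # Sort the distances to x0 and pick the k-th smallest.
    return float(np.sort(np.abs(x - x0))[k - 1])

# Watch how the k-th neighbor distance changes with the sample size.
for n in (100, 1000, 10000, 100000):
    print(n, kth_nn_distance(n, k=5))
```

Plotting these distances against `n` on a log-log scale is one way to guess the rate that the analytical bound should reproduce.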
