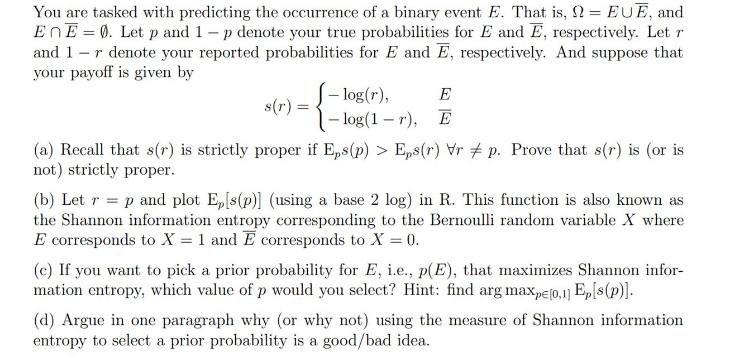

Question:

You are tasked with predicting the occurrence of a binary event $E$. That is, $\Omega = E \cup \bar{E}$ and $E \cap \bar{E} = \emptyset$. Let $p$ and $1-p$ denote your true probabilities for $E$ and $\bar{E}$, respectively. Let $r$ and $1-r$ denote your reported probabilities for $E$ and $\bar{E}$, respectively. And suppose that your payoff is given by

$$
s(r) = \begin{cases} -\log(r), & E \\ -\log(1-r), & \bar{E} \end{cases}
$$

(a) Recall that $s(r)$ is strictly proper if $E_p[s(p)] > E_p[s(r)]$ for all $r \neq p$. Prove that $s(r)$ is (or is not) strictly proper.

(b) Let $r = p$ and plot $E_p[s(p)]$ (using a base 2 log) in R. This function is also known as the Shannon information entropy corresponding to the Bernoulli random variable $X$, where $E$ corresponds to $X = 1$ and $\bar{E}$ corresponds to $X = 0$.

(c) If you want to pick a prior probability for $E$, i.e., $p(E)$, that maximizes Shannon information entropy, which value of $p$ would you select? Hint: find $\arg\max_{p \in [0,1]} E_p[s(p)]$.

(d) Argue in one paragraph why (or why not) using the measure of Shannon information entropy to select a prior probability is a good/bad idea.
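Parts (a) through (c) can be sanity-checked numerically before writing the proof. The sketch below is not the expected R submission; it is a Python stand-in using only the standard library, and the names `expected_score`, `entropy`, `grid`, and `p_true` are illustrative assumptions. It evaluates $E_p[s(r)] = -p\log_2 r - (1-p)\log_2(1-r)$ on a grid. Because the score as written is a negative log, the expected score is smallest, not largest, at $r = p$ (Gibbs' inequality), which is worth keeping in mind when comparing against the direction of the inequality in part (a).

```python
import math


def expected_score(p, r):
    """E_p[s(r)] = -p*log2(r) - (1-p)*log2(1-r), treating 0*log(0) as 0."""
    total = 0.0
    if p > 0:
        total -= p * math.log2(r)
    if p < 1:
        total -= (1 - p) * math.log2(1 - r)
    return total


def entropy(p):
    """Shannon entropy of a Bernoulli(p) variable in bits, i.e. E_p[s(p)]."""
    return expected_score(p, p)


# Grid over the open interval (0, 1); the endpoints are skipped to avoid log(0).
grid = [i / 1000 for i in range(1, 1000)]

# Part (a): for a fixed true probability (0.3 is an arbitrary choice),
# find the report r on the grid with the smallest expected score.
p_true = 0.3
best_r = min(grid, key=lambda r: expected_score(p_true, r))

# Part (c): find the p on the grid with the largest entropy.
best_p = max(grid, key=entropy)

print("report minimizing E_p[s(r)] for p = 0.3:", best_r)
print("p maximizing entropy:", best_p)
print("entropy at that p (bits):", entropy(best_p))
```

The same grid of `(p, entropy(p))` pairs is what part (b) asks to plot; in R that would be a single curve over $p \in [0, 1]$.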
