Question: You need to code the project in Python. Use a Jupyter notebook and submit a single code file with the .ipynb extension.

If your computer's processing capacity is not sufficient, Google Colab is recommended.

Classifying News Texts

The aim is to detect real and fake news content shared through social media posts. In the dataset, "target" indicates whether the news is real or fake, while "score" is a confidence score, on a scale from 1 to 5, of how real the news is. A score of 5 is the highest and indicates that the news is definitely true; a score of 1 is the lowest and indicates that the news is definitely fake. A score of 3 indicates that there is no clear opinion either way: the news is considered 50 percent fake and 50 percent real.
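The description pins down only three points of the score scale (1 = definitely fake, 3 = 50/50, 5 = definitely true). A minimal sketch, assuming the scale is linear between those points (the text does not define scores 2 and 4, so that part is an assumption), maps a score to the implied probability that the news is real. The function name is hypothetical.

```python
def score_to_real_probability(score: float) -> float:
    """Map a 1-5 truth score to an implied probability that the news is real.

    Assumes a linear scale: 1 -> 0.0 (definitely fake), 3 -> 0.5, 5 -> 1.0 (definitely true).
    """
    if not 1 <= score <= 5:
        raise ValueError(f"score must be between 1 and 5, got {score}")
    return (score - 1) / 4
```

For example, `score_to_real_probability(4)` gives 0.75 under this linear assumption.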

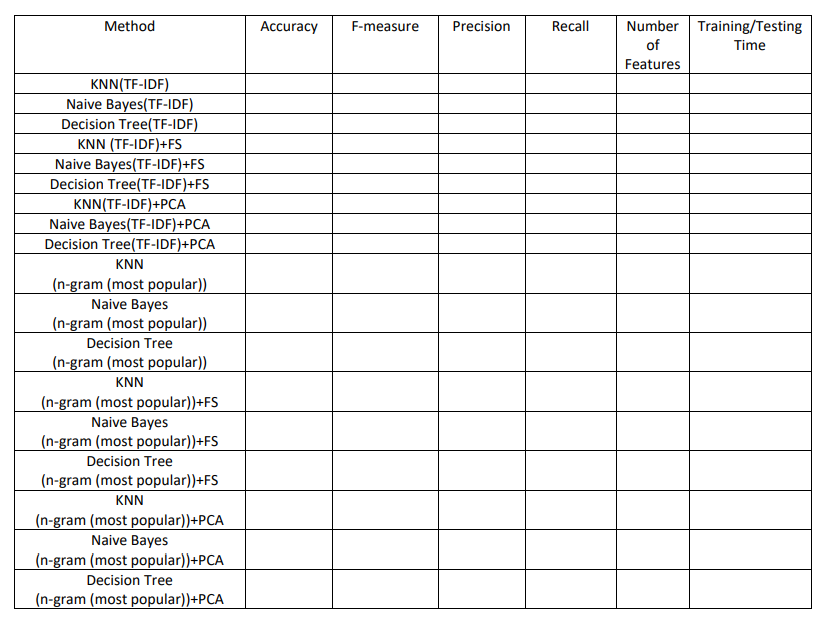

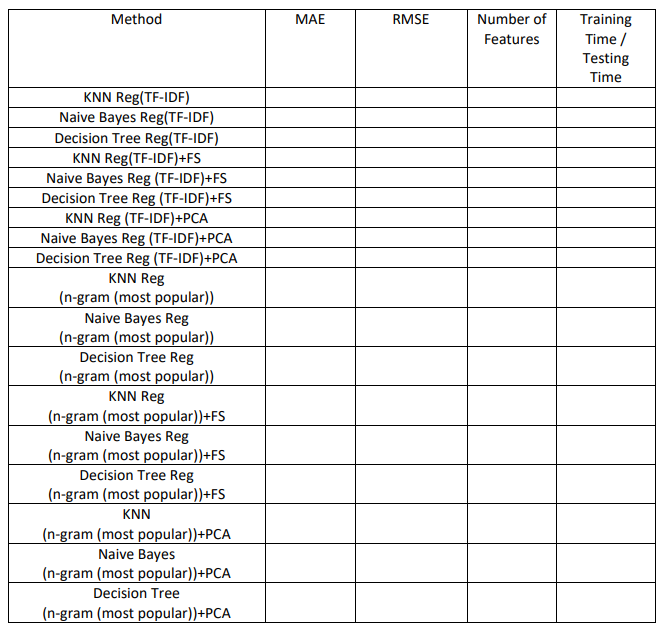

* In the project, the KNN, Decision Tree, Naive Bayes, and SVM classification algorithms will be used. At this stage, binary classification will be applied.
* Linear Regression, KNN Regression, and Decision Tree Regression will be used in the project. In this section, the "score" will be predicted.
* To obtain vectors from the text data, text pre-processing techniques (stemming, stop-word removal, etc.), TF-IDF, and n-gram (most popular) approaches will be used.
* Feature selection (FS) and feature extraction (PCA) techniques will be used in the project. You can choose any algorithm for FS.
* Training and test sets should be built by applying 5-fold cross-validation. The final results must be the average of the 5 fold results.
* The following tables must be filled in according to the results of the classification and regression algorithms.
* Parameter tuning should be performed for the classification and regression algorithms used, and the best results obtained should be added to the tables.
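A minimal sketch of the text-to-vector step, assuming scikit-learn and NLTK are available. Stop words come from scikit-learn's built-in English list and stemming uses NLTK's rule-based `PorterStemmer` (no corpus download needed). "n-gram (most popular)" is interpreted here, as an assumption, as the `max_features` most frequent unigrams and bigrams kept by `CountVectorizer`; the defaults 5000 and `(1, 2)` are placeholders to tune. The helper names `preprocess`, `make_tfidf_vectorizer`, and `make_popular_ngram_vectorizer` are hypothetical.

```python
import re

from nltk.stem import PorterStemmer
from sklearn.feature_extraction.text import (
    ENGLISH_STOP_WORDS,
    CountVectorizer,
    TfidfVectorizer,
)

_stemmer = PorterStemmer()


def preprocess(text: str) -> str:
    """Lowercase, keep alphabetic tokens, drop English stop words, Porter-stem the rest."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return " ".join(_stemmer.stem(tok) for tok in tokens if tok not in ENGLISH_STOP_WORDS)


def make_tfidf_vectorizer() -> TfidfVectorizer:
    # Unigram TF-IDF computed on the preprocessed (stemmed, stop-word-free) text.
    return TfidfVectorizer(preprocessor=preprocess)


def make_popular_ngram_vectorizer(max_features: int = 5000, ngram_range=(1, 2)) -> CountVectorizer:
    # Keeps only the `max_features` n-grams with the highest corpus-wide term
    # frequency: one possible reading of "n-gram (most popular)".
    return CountVectorizer(
        preprocessor=preprocess, ngram_range=ngram_range, max_features=max_features
    )
```

Placing either vectorizer as the first step of a scikit-learn `Pipeline` means the vocabulary and IDF weights are learned only from the training folds of each cross-validation split, which avoids leaking test-fold statistics.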
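For the parameter-tuning requirement, one option is scikit-learn's `GridSearchCV` over a pipeline, scored with stratified 5-fold cross-validation. The sketch below tunes a TF-IDF + KNN pipeline; the function name `tune_knn` and every grid value are illustrative assumptions, and the same pattern applies to the other classifiers and regressors by changing the step names and grid.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.model_selection import GridSearchCV, StratifiedKFold
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import Pipeline


def tune_knn(texts, labels, seed: int = 0) -> GridSearchCV:
    """Grid-search TF-IDF and KNN settings with stratified 5-fold cross-validation."""
    pipe = Pipeline([("vec", TfidfVectorizer()), ("clf", KNeighborsClassifier())])
    # Placeholder grid: the candidate values are illustrative, not recommendations.
    param_grid = {
        "vec__ngram_range": [(1, 1), (1, 2)],
        "clf__n_neighbors": [1, 3, 5],
        "clf__weights": ["uniform", "distance"],
    }
    cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=seed)
    search = GridSearchCV(pipe, param_grid, cv=cv, scoring="f1_macro")
    # fit() returns the fitted search object, with best_params_ and best_score_ set.
    return search.fit(texts, labels)
```

Note that `best_score_` is measured on the same folds used to pick the parameters, so it is optimistically biased; wrapping the search in an outer 5-fold loop (nested cross-validation) gives a less biased estimate for the tables.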

Table 1: Classification Results

\begin{tabular}{|l|c|c|c|c|c|c|}
\hline
Method & Accuracy & F-measure & Precision & Recall & Number of Features & Training/Testing Time \\ \hline
KNN (TF-IDF) & & & & & & \\ \hline
Naive Bayes (TF-IDF) & & & & & & \\ \hline
Decision Tree (TF-IDF) & & & & & & \\ \hline
KNN (TF-IDF)+FS & & & & & & \\ \hline
Naive Bayes (TF-IDF)+FS & & & & & & \\ \hline
Decision Tree (TF-IDF)+FS & & & & & & \\ \hline
KNN (TF-IDF)+PCA & & & & & & \\ \hline
Naive Bayes (TF-IDF)+PCA & & & & & & \\ \hline
Decision Tree (TF-IDF)+PCA & & & & & & \\ \hline
KNN (n-gram (most popular)) & & & & & & \\ \hline
Naive Bayes (n-gram (most popular)) & & & & & & \\ \hline
Decision Tree (n-gram (most popular)) & & & & & & \\ \hline
KNN (n-gram (most popular))+FS & & & & & & \\ \hline
Naive Bayes (n-gram (most popular))+FS & & & & & & \\ \hline
Decision Tree (n-gram (most popular))+FS & & & & & & \\ \hline
KNN (n-gram (most popular))+PCA & & & & & & \\ \hline
Naive Bayes (n-gram (most popular))+PCA & & & & & & \\ \hline
Decision Tree (n-gram (most popular))+PCA & & & & & & \\ \hline
\end{tabular}

Table 2: Regression Results

\begin{tabular}{|l|c|c|c|c|}
\hline
Method & MAE & RMSE & Number of Features & Training Time / Testing Time \\ \hline
KNN Reg (TF-IDF) & & & & \\ \hline
Naive Bayes Reg (TF-IDF) & & & & \\ \hline
Decision Tree Reg (TF-IDF) & & & & \\ \hline
KNN Reg (TF-IDF)+FS & & & & \\ \hline
Naive Bayes Reg (TF-IDF)+FS & & & & \\ \hline
Decision Tree Reg (TF-IDF)+FS & & & & \\ \hline
KNN Reg (TF-IDF)+PCA & & & & \\ \hline
Naive Bayes Reg (TF-IDF)+PCA & & & & \\ \hline
Decision Tree Reg (TF-IDF)+PCA & & & & \\ \hline
KNN Reg (n-gram (most popular)) & & & & \\ \hline
Naive Bayes Reg (n-gram (most popular)) & & & & \\ \hline
Decision Tree Reg (n-gram (most popular)) & & & & \\ \hline
KNN Reg (n-gram (most popular))+FS & & & & \\ \hline
Naive Bayes Reg (n-gram (most popular))+FS & & & & \\ \hline
Decision Tree Reg (n-gram (most popular))+FS & & & & \\ \hline
KNN Reg (n-gram (most popular))+PCA & & & & \\ \hline
Naive Bayes Reg (n-gram (most popular))+PCA & & & & \\ \hline
Decision Tree Reg (n-gram (most popular))+PCA & & & & \\ \hline
\end{tabular}
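A sketch of how one row of Table 1 could be produced, assuming `texts` is a list of news strings and `labels` holds the "target" column. Each configuration is a pipeline (vectorizer, then optional chi-squared `SelectKBest` or PCA, then classifier) evaluated with stratified 5-fold `cross_validate`, so every reported number is the mean over the 5 folds. Assumptions to flag: the names `CLASSIFIERS`, `to_dense`, `build_classifier_pipeline`, and `evaluate_classifier` are hypothetical; `k` is a placeholder; macro-averaged F/P/R is chosen so string labels also work; `MultinomialNB` rejects the negative values PCA produces, so `GaussianNB` is used for the +PCA rows; and SVM from the requirements could be added with `sklearn.svm.LinearSVC`.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.feature_selection import SelectKBest, chi2
from sklearn.model_selection import StratifiedKFold, cross_validate
from sklearn.naive_bayes import GaussianNB, MultinomialNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import FunctionTransformer
from sklearn.tree import DecisionTreeClassifier

# Hypothetical model table; hyperparameters are placeholders to be tuned.
CLASSIFIERS = {
    "KNN": KNeighborsClassifier(n_neighbors=5),
    "Naive Bayes": MultinomialNB(),  # needs non-negative input (TF-IDF, counts)
    "Naive Bayes (for +PCA rows)": GaussianNB(),  # accepts negative PCA components
    "Decision Tree": DecisionTreeClassifier(random_state=0),
}


def to_dense(X):
    # PCA's sparse-input support depends on the scikit-learn version, so densify first.
    return X.toarray() if hasattr(X, "toarray") else X


def build_classifier_pipeline(vectorizer, model, reduction="none", k=100):
    """Vectorizer -> optional FS (chi2) or PCA with k outputs -> classifier."""
    steps = [("vec", vectorizer)]
    if reduction == "fs":
        # chi2 requires non-negative features, which TF-IDF and n-gram counts satisfy.
        steps.append(("fs", SelectKBest(chi2, k=k)))
    elif reduction == "pca":
        steps += [("dense", FunctionTransformer(to_dense)), ("pca", PCA(n_components=k))]
    elif reduction != "none":
        raise ValueError(f"unknown reduction: {reduction}")
    steps.append(("clf", model))
    return Pipeline(steps)


def evaluate_classifier(pipeline, texts, labels, seed=0):
    """Return one Table 1 row, every value averaged over stratified 5-fold CV."""
    cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=seed)
    res = cross_validate(
        pipeline, texts, labels, cv=cv, return_estimator=True,
        scoring=["accuracy", "f1_macro", "precision_macro", "recall_macro"],
    )
    return {
        "Accuracy": res["test_accuracy"].mean(),
        "F-measure": res["test_f1_macro"].mean(),
        "Precision": res["test_precision_macro"].mean(),
        "Recall": res["test_recall_macro"].mean(),
        # Number of features the final classifier actually received, averaged over folds.
        "Number of Features": np.mean([est[-1].n_features_in_ for est in res["estimator"]]),
        # Mean seconds per fold; fit time includes vectorization and FS/PCA.
        "Training/Testing Time": (res["fit_time"].mean(), res["score_time"].mean()),
    }
```

For example, `evaluate_classifier(build_classifier_pipeline(TfidfVectorizer(), CLASSIFIERS["KNN"], "fs", k=500), texts, labels)` would fill the "KNN (TF-IDF)+FS" row. Reusing the model instances across rows is safe because `cross_validate` clones the pipeline for every fold.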
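Table 2 can be filled the same way with plain (unstratified) 5-fold `KFold`, since "score" is numeric. This sketch uses the regressors named in the requirements (Linear Regression, KNN Regression, Decision Tree Regression) and univariate `f_regression` feature selection; the names `REGRESSORS`, `build_regressor_pipeline`, and `evaluate_regressor` are hypothetical and `k` is a placeholder. The +PCA rows would add a densify step and a `PCA` step before the regressor.

```python
import numpy as np
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.feature_selection import SelectKBest, f_regression
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import KFold, cross_validate
from sklearn.neighbors import KNeighborsRegressor
from sklearn.pipeline import Pipeline
from sklearn.tree import DecisionTreeRegressor

# Hypothetical model table; hyperparameters are placeholders to be tuned.
REGRESSORS = {
    "Linear Reg": LinearRegression(),
    "KNN Reg": KNeighborsRegressor(n_neighbors=5),
    "Decision Tree Reg": DecisionTreeRegressor(random_state=0),
}


def build_regressor_pipeline(vectorizer, model, k=None):
    """Vectorizer -> optional F-test feature selection keeping k features -> regressor."""
    steps = [("vec", vectorizer)]
    if k is not None:
        steps.append(("fs", SelectKBest(f_regression, k=k)))
    steps.append(("reg", model))
    return Pipeline(steps)


def evaluate_regressor(pipeline, texts, scores, seed=0):
    """Return one Table 2 row: MAE and RMSE averaged over 5 folds."""
    cv = KFold(n_splits=5, shuffle=True, random_state=seed)
    res = cross_validate(
        pipeline, texts, scores, cv=cv, return_estimator=True,
        scoring=["neg_mean_absolute_error", "neg_root_mean_squared_error"],
    )
    return {
        # scikit-learn scorers are "higher is better", so error metrics come back negated.
        "MAE": -res["test_neg_mean_absolute_error"].mean(),
        "RMSE": -res["test_neg_root_mean_squared_error"].mean(),
        "Number of Features": np.mean([est[-1].n_features_in_ for est in res["estimator"]]),
        # Mean seconds per fold for fitting and for predicting on the test fold.
        "Training Time / Testing Time": (res["fit_time"].mean(), res["score_time"].mean()),
    }
```

Because RMSE squares each error before averaging, it is always at least as large as MAE on the same fold; a large gap between the two points to a few badly mispredicted scores.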
