Question:

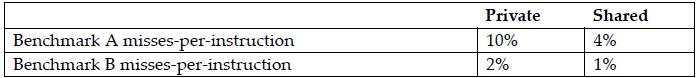

Chip multiprocessors (CMPs) have multiple cores and their caches on a single chip. CMP on-chip L2 cache design has interesting trade-offs. The following table shows the miss rates and hit latencies for two benchmarks with private vs. shared L2 cache designs. Assume L1 cache has a 3% miss rate and a 1-cycle access time.

Assume the following hit latencies:

1. Which cache design is better for each of these benchmarks? Use data to support your conclusion.

2. Off-chip bandwidth becomes the bottleneck as the number of CMP cores increases. How does this bottleneck affect private and shared cache systems differently? Choose the better design if the latency of the first off-chip link doubles.

3. Discuss the pros and cons of shared vs. private L2 caches for single-threaded, multithreaded, and multiprogrammed workloads, and reconsider them if the chip also has an on-chip L3 cache.

4. Would a non-blocking L2 cache produce more improvement on a CMP with a shared L2 cache or with a private L2 cache? Why?

5. Assume new generations of processors double the number of cores every 18 months. To maintain the same level of per-core performance, how much more off-chip memory bandwidth is needed for a processor released in three years?

6. Consider the entire memory hierarchy. What kinds of optimizations can increase the number of concurrent misses the hierarchy can sustain?
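
For questions 1 and 2, one way to compare the two designs is average memory access time (AMAT). The sketch below assumes a simple two-level model, AMAT = t_L1 + m_L1 × (t_L2 + m_L2 × t_mem), treats each table miss rate as the local L2 miss rate, and defaults to the stated L1 parameters (1-cycle hit, 3% miss rate). The helper names `amat` and `better_design` and every numeric input in the example are hypothetical placeholders, not values from the benchmark table; substitute the table's miss rates and hit latencies.

```python
def amat(l1_hit, l1_miss_rate, l2_hit, l2_miss_rate, mem_latency):
    """Average memory access time (cycles) for an L1 + L2 + memory hierarchy.

    Assumption: l2_miss_rate is the local L2 miss rate (misses per L2 access).
    """
    return l1_hit + l1_miss_rate * (l2_hit + l2_miss_rate * mem_latency)


def better_design(private, shared, mem_latency, l1_hit=1, l1_miss_rate=0.03):
    """Return "private" or "shared", whichever yields the lower AMAT.

    `private` and `shared` are (l2_hit_cycles, l2_miss_rate) tuples.
    Defaults follow the problem statement: 1-cycle L1 hit, 3% L1 miss rate.
    Ties are reported as "private".
    """
    p = amat(l1_hit, l1_miss_rate, private[0], private[1], mem_latency)
    s = amat(l1_hit, l1_miss_rate, shared[0], shared[1], mem_latency)
    return "private" if p <= s else "shared"


# Hypothetical placeholder values; replace with the benchmark table's numbers.
private_l2 = (5, 0.10)   # fast hit, higher miss rate
shared_l2 = (20, 0.05)   # slower hit, lower miss rate
for mem_latency in (200, 400):  # baseline off-chip latency, then doubled
    print(mem_latency, better_design(private_l2, shared_l2, mem_latency))
```

With these made-up numbers the preferred design flips from private (AMAT 1.75 vs. 1.90 cycles at a 200-cycle memory latency) to shared (2.35 vs. 2.20 cycles at 400): the gap between the designs grows by m_L1 × (m_private − m_shared) × Δt_mem, so the design with the higher L2 miss rate is hurt more when off-chip latency rises.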
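
For question 5, three years is 36 months, or two 18-month doublings, so the core count grows by 2^2 = 4×. If each core's off-chip traffic stays constant (one reading of "the same level of per-core performance"), total off-chip bandwidth has to grow by the same factor. A minimal sketch of that arithmetic; `bandwidth_scale` is a hypothetical helper name:

```python
def bandwidth_scale(months, doubling_period_months=18):
    """Growth factor for total off-chip bandwidth after `months`.

    Assumes the core count doubles every `doubling_period_months` and that
    each core's bandwidth demand stays constant, so bandwidth scales with cores.
    """
    return 2 ** (months / doubling_period_months)


print(bandwidth_scale(36))  # 4.0: two doublings in three years
```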
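
For questions 4 and 6, Little's law gives a rough sense of why concurrent misses matter: to sustain a given off-chip bandwidth, the number of misses in flight must be at least bandwidth × latency ÷ line size. The sketch below applies that bound under stated assumptions; the helper name `misses_in_flight` and the example numbers (200-cycle miss latency, 16 bytes per cycle, 64-byte lines) are hypothetical, not taken from the problem.

```python
def misses_in_flight(latency_cycles, bytes_per_cycle, line_bytes=64):
    """Minimum outstanding misses needed to sustain `bytes_per_cycle`.

    Little's law: items in flight = throughput x latency. Here the items are
    cache-line misses, so throughput is bytes_per_cycle / line_bytes lines
    per cycle. Integer inputs are assumed; the result is rounded up.
    """
    in_flight_bytes = latency_cycles * bytes_per_cycle
    return -(-in_flight_bytes // line_bytes)  # ceiling division on integers


# Hypothetical example: 200-cycle miss latency, 16 B/cycle, 64 B lines.
print(misses_in_flight(200, 16))  # 50
```

If the hierarchy can track fewer outstanding misses than this bound (for example, because a blocking cache stalls the core on every miss), the available off-chip bandwidth cannot be fully used.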

Source: *Computer Organization and Design MIPS Edition: The Hardware/Software Interface*, 6th Edition, by David A. Patterson and John L. Hennessy (ISBN 9780128201091).