Question:

Suppose we have three positive examples $x_1=(1,0,0)$, $x_2=(0,0,1)$ and $x_3=(0,1,0)$ and three negative examples $x_4=(-1,0,0)$, $x_5=(0,-1,0)$ and $x_6=(0,0,-1)$. Apply the standard gradient ascent method to train a logistic regression classifier (without regularization terms).
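For reference, the usual unregularized log-likelihood and its gradient ascent update can be written as follows. This assumes labels $y_i\in\{0,1\}$ ($y_i=1$ for the positive examples) and inputs augmented as $\tilde{x}_i=(1,x_i)$ so that the first weight $w_0$ acts as the bias; neither convention is stated explicitly in the question.

$$
\ell(w)=\sum_{i=1}^{6}\Big[y_i\ln\sigma(w^\top\tilde{x}_i)+(1-y_i)\ln\big(1-\sigma(w^\top\tilde{x}_i)\big)\Big],\qquad \sigma(z)=\frac{1}{1+e^{-z}}
$$

$$
\nabla\ell(w)=\sum_{i=1}^{6}\big(y_i-\sigma(w^\top\tilde{x}_i)\big)\,\tilde{x}_i,\qquad w^{t+1}=w^{t}+\eta\,\nabla\ell(w^{t})
$$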

Initialize the weight vector with two different values and set $w_0^0=0$ (e.g. $w^0=(0,0,0,0)'$, $w^0=(0,0,1,0)'$). Would the final weight vector ($w^*$) be the same for the two different initial values? What are the values? Please explain your answer in detail. You may assume the learning rate to be a positive real constant $\eta$.
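The procedure in the question can be checked numerically. Below is a minimal Python sketch of batch gradient ascent on the six examples, assuming labels 1/0 for positive/negative, inputs augmented with a leading 1 so that `w[0]` is the bias, and the illustrative values `eta = 0.5` and `steps = 10_000` (neither is given in the question). The helper names `sigmoid`, `gradient`, `train` and `unit` are hypothetical. It runs both initializations and prints the raw weights next to their unit-length direction.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function sigma(z) = 1 / (1 + exp(-z))."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

# Inputs from the question, each augmented with a leading 1 so that w[0] is the bias w_0.
X = [
    (1.0, 1.0, 0.0, 0.0),   # x1, positive
    (1.0, 0.0, 0.0, 1.0),   # x2, positive
    (1.0, 0.0, 1.0, 0.0),   # x3, positive
    (1.0, -1.0, 0.0, 0.0),  # x4, negative
    (1.0, 0.0, -1.0, 0.0),  # x5, negative
    (1.0, 0.0, 0.0, -1.0),  # x6, negative
]
Y = [1, 1, 1, 0, 0, 0]  # assumed labels: 1 = positive class, 0 = negative class

def gradient(w):
    """Gradient of the log-likelihood: sum_i (y_i - sigma(w . x_i)) * x_i."""
    g = [0.0] * len(w)
    for x, y in zip(X, Y):
        err = y - sigmoid(sum(wj * xj for wj, xj in zip(w, x)))
        for j, xj in enumerate(x):
            g[j] += err * xj
    return g

def train(w_init, eta=0.5, steps=10_000):
    """Batch gradient ascent with a constant learning rate (eta and steps are illustrative assumptions)."""
    w = list(map(float, w_init))
    for _ in range(steps):
        g = gradient(w)
        w = [wj + eta * gj for wj, gj in zip(w, g)]
    return w

def unit(w):
    """Scale w to unit Euclidean length so that only its direction is compared."""
    norm = math.sqrt(sum(wj * wj for wj in w))
    return [wj / norm for wj in w]

if __name__ == "__main__":
    for w_init in [(0, 0, 0, 0), (0, 0, 1, 0)]:
        for steps in (100, 1000, 10_000):
            w = train(w_init, steps=steps)
            print(w_init, steps, [round(v, 4) for v in w], [round(v, 4) for v in unit(w)])
```

Printing several step counts shows the raw weights still growing at 10,000 steps, while the unit-length vectors from the two starting points become nearly identical.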

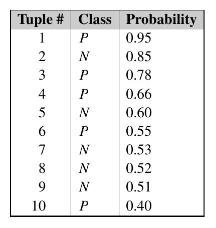

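| Tuple # | Class | Probability |
|---|---|---|
| 1 | P | 0.95 |
| 2 | N | 0.85 |
| 3 | P | 0.78 |
| 4 | P | 0.66 |
| 5 | N | 0.60 |
| 6 | P | 0.55 |
| 7 | N | 0.53 |
| 8 | N | 0.52 |
| 9 | N | 0.51 |
| 10 | P | 0.40 |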

Step by Step Solution


With a positive learning rate η we ...
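An independent hand sketch of the gradient for this specific data set, assuming labels $y=1$ for positive and $y=0$ for negative examples, inputs augmented with a leading 1, and $w=(w_0,w_1,w_2,w_3)'$ with $w_0$ the bias. Feature $j$ is nonzero only in the positive example $e_j$ and the negative example $-e_j$, so with $\sigma(z)=1/(1+e^{-z})$:

$$
\frac{\partial\ell}{\partial w_0}=\sum_{j=1}^{3}\Big[\big(1-\sigma(w_0+w_j)\big)-\sigma(w_0-w_j)\Big],\qquad
\frac{\partial\ell}{\partial w_j}=\big(1-\sigma(w_0+w_j)\big)+\sigma(w_0-w_j),\quad j=1,2,3.
$$

Since $1-\sigma(a)=\sigma(-a)$, setting $w_0=0$ makes every bracket in the first sum vanish, so $w_0$ stays at $0$ and each feature weight follows its own recursion:

$$
w_0^{t}=0,\qquad w_j^{t+1}=w_j^{t}+2\eta\,\sigma\big(-w_j^{t}\big),\quad j=1,2,3.
$$

Because $\sigma(-w_j^t)>0$, every $w_j$ increases at each step for any $\eta>0$ and the gradient never vanishes: the data are linearly separable, so under this sketch there is no finite maximizer $w^*$. From $w^0=(0,0,0,0)'$ the three feature weights stay equal; from $w^0=(0,0,1,0)'$ they differ at every finite step, but all of them grow like $\ln t$, so both runs approach the same direction $(0,1,1,1)'/\sqrt{3}$, i.e. the boundary where the three input coordinates sum to zero.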