Question:

Let $A = (a_{ij}) \in \mathbb{R}^{n,n}$ and $b \in \mathbb{R}^n$, with $a_{ii} \neq 0$ for every $i = 1, \dots, n$. The Jacobi method for solving the square linear system $Ax = b$ consists in decomposing $A$ as a sum $A = D + R$, where $D = \operatorname{diag}(a_{11}, \dots, a_{nn})$ and $R$ contains the off-diagonal elements of $A$, and then applying the recursion

$$x^{(k+1)} = D^{-1}\bigl(b - R x^{(k)}\bigr), \qquad k = 0, 1, 2, \dots,$$

with initial point $x^{(0)} \in \mathbb{R}^n$.
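Componentwise, the recursion reads $x_i^{(k+1)} = \bigl(b_i - \sum_{j \neq i} a_{ij} x_j^{(k)}\bigr) / a_{ii}$, so applying $D^{-1}$ is just a division by each diagonal entry. A minimal plain-Python sketch, assuming $A$ is given as a dense list of rows; the function name `jacobi` and the parameters `num_iters` and `tol` are illustrative choices, not part of the original problem:

```python
def jacobi(A, b, x0, num_iters=100, tol=1e-10):
    """Hypothetical helper: Jacobi iteration x^{(k+1)} = D^{-1}(b - R x^{(k)})."""
    n = len(A)
    x = list(x0)
    for _ in range(num_iters):
        x_new = []
        for i in range(n):
            # (R x)_i: only the off-diagonal entries of row i contribute
            off_diag = sum(A[i][j] * x[j] for j in range(n) if j != i)
            # Apply D^{-1}: divide by the diagonal entry a_ii (assumed nonzero)
            x_new.append((b[i] - off_diag) / A[i][i])
        # Assumed stopping rule: successive iterates agree within tol (max-norm)
        if max(abs(x_new[i] - x[i]) for i in range(n)) < tol:
            return x_new
        x = x_new
    # Iteration budget exhausted; return the last iterate
    return x


# Example system 4x + y = 1, 2x + 5y = 2, whose exact solution is (1/6, 1/3)
A = [[4.0, 1.0], [2.0, 5.0]]
b = [1.0, 2.0]
print(jacobi(A, b, [0.0, 0.0]))
```

For this $A$ the iteration matrix $D^{-1}R$ has eigenvalues $\pm\sqrt{1/10}$, so the printed iterate should be very close to $(1/6, 1/3)$.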

The method belongs to a class of methods known as matrix splitting, in which $A$ is decomposed as the sum of a “simple” invertible matrix and another matrix; the Jacobi method uses the particular splitting in which the simple part is the diagonal matrix $D$.
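As a sketch of why the splitting viewpoint matters for convergence (the letters $S$ and $T$ for the two parts are our notation, not the source's), write $A = S + T$ with $S$ invertible and iterate $x^{(k+1)} = S^{-1}(b - T x^{(k)})$; Jacobi is the case $S = D$, $T = R$. Subtracting the fixed-point equation satisfied by the solution $x^\star$ gives the error recursion:

$$
\begin{aligned}
A x^\star = b \;&\iff\; x^\star = S^{-1}\bigl(b - T x^\star\bigr),\\
e^{(k+1)} := x^{(k+1)} - x^\star &= -S^{-1}T\, e^{(k)}
\quad\Longrightarrow\quad e^{(k)} = \bigl(-S^{-1}T\bigr)^k e^{(0)}.
\end{aligned}
$$

Hence the iterates converge from every initial point exactly when $(S^{-1}T)^k \to 0$, that is, when the spectral radius satisfies $\rho(S^{-1}T) < 1$; a sufficient condition is $\|S^{-1}T\| < 1$ in some induced matrix norm. For Jacobi this reads $\rho(D^{-1}R) < 1$.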

1. Find conditions on D, R that guarantee convergence from an arbitrary initial point.

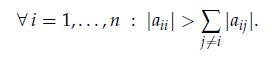

2. The matrix $A$ is said to be strictly row diagonally dominant if

$$|a_{ii}| > \sum_{j \neq i} |a_{ij}|, \qquad i = 1, \dots, n.$$

Show that when $A$ is strictly row diagonally dominant, the Jacobi iteration $x^{(k+1)} = D^{-1}\bigl(b - R x^{(k)}\bigr)$ converges.
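One standard route for part 2: $\|D^{-1}R\|_\infty = \max_i \sum_{j \neq i} |a_{ij}| / |a_{ii}|$, and this maximum is $< 1$ exactly when $A$ is strictly row diagonally dominant. Since $\rho(D^{-1}R) \le \|D^{-1}R\|_\infty$ for the induced $\infty$-norm, the spectral-radius condition then holds. The plain-Python sketch below checks both quantities on small example matrices; the function names are hypothetical helpers, not from the source:

```python
def is_strictly_row_diagonally_dominant(A):
    """True if |a_ii| > sum_{j != i} |a_ij| for every row i."""
    n = len(A)
    return all(
        abs(A[i][i]) > sum(abs(A[i][j]) for j in range(n) if j != i)
        for i in range(n)
    )


def jacobi_inf_norm(A):
    """Induced infinity-norm of D^{-1} R: max_i sum_{j != i} |a_ij| / |a_ii|."""
    n = len(A)
    return max(
        sum(abs(A[i][j]) for j in range(n) if j != i) / abs(A[i][i])
        for i in range(n)
    )


# Strictly row diagonally dominant: 4 > 1 + 2, 5 > 1 + 3, 3 > 0 + 2
A = [[4.0, 1.0, 2.0], [1.0, 5.0, 3.0], [0.0, 2.0, 3.0]]
print(is_strictly_row_diagonally_dominant(A), jacobi_inf_norm(A))

# Not diagonally dominant: the norm bound exceeds 1 and gives no guarantee
B = [[1.0, 2.0], [3.0, 1.0]]
print(is_strictly_row_diagonally_dominant(B), jacobi_inf_norm(B))
```

For `A` this prints `True 0.8` and for `B` it prints `False 3.0`. A norm above 1 does not by itself prove divergence; it only means this sufficient test fails.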

Step by Step Solution


1. Assume that $M = D^{-1}R$ is diagonalizable, $M = V^{-1}EV$, with $E$ a diagona...
