Question: 3. ANSWER EITHER THIS QUESTION OR QUESTION 2. Language. Imagine a language learner trying to infer a simple grammar from the language spoken around her.

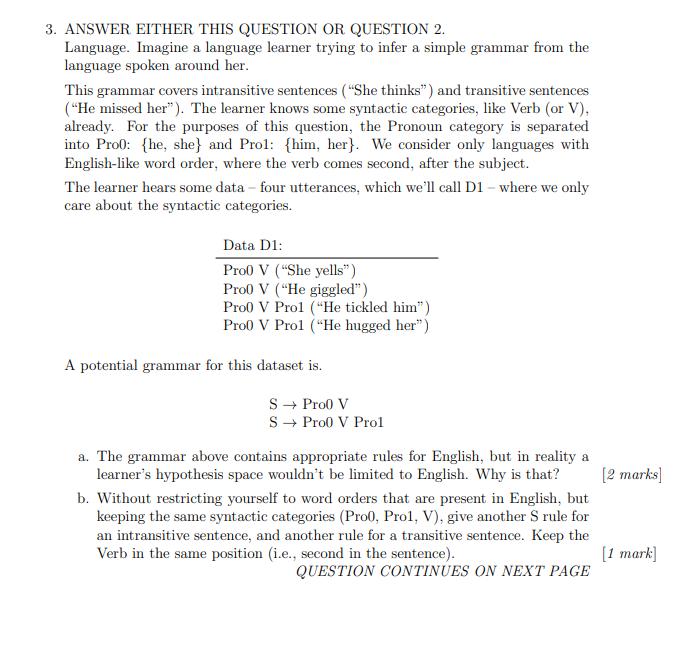

3. ANSWER EITHER THIS QUESTION OR QUESTION 2.

Language. Imagine a language learner trying to infer a simple grammar from the language spoken around her. This grammar covers intransitive sentences ("She thinks") and transitive sentences ("He missed her"). The learner knows some syntactic categories, like Verb (or V), already. For the purposes of this question, the Pronoun category is separated into Pro0: {he, she} and Pro1: {him, her}. We consider only languages with English-like word order, where the verb comes second, after the subject. The learner hears some data (four utterances, which we'll call D1), where we only care about the syntactic categories.

Data D1:
Pro0 V ("She yells")
Pro0 V ("He giggled")
Pro0 V Pro1 ("He tickled him")
Pro0 V Pro1 ("He hugged her")

A potential grammar for this dataset is:
S → Pro0 V
S → Pro0 V Pro1

a. The grammar above contains appropriate rules for English, but in reality a learner's hypothesis space wouldn't be limited to English. Why is that?

b. Without restricting yourself to word orders that are present in English, but keeping the same syntactic categories (Pro0, Pro1, V), give another S rule for an intransitive sentence, and another rule for a transitive sentence. Keep the Verb in the same position (i.e., second in the sentence).

c. Conjugate priors.

i. If we want a Bayesian model of the different probabilities of S rules given the data, we must define a likelihood function with parameters and a prior. What is a simple likelihood function that we could use, if we want to use a conjugate prior? What is the conjugate prior?

ii. Under the prior and likelihood you have chosen, what is the posterior probability of θ given D1? Assume that all S rules are equally likely, a priori. If your prior has a hyperparameter, call it α; your answer can contain α. Make sure you consider the full hypothesis space (all rules with V second). If you can describe it in terms of known distributions and parameters, do that; you do not need to give mathematical expressions for probability functions.

iii. What is the effect of the prior? What happens if α is very large, or very small? If you do not have an answer for (i) or (ii), pick any likelihood function with a conjugate prior and discuss what happens when the hyperparameters take extreme values.

d. The learner now hears data from another parent who is a speaker of an ergative language. In ergative languages, the "subject" of intransitive sentences looks like the "object" of transitive sentences:

Data D2:
Pro0 V ("She coughed")
Pro0 V ("He sneezed")
Pro1 V Pro0 ("Him blessed she")

e. If the learner merges the two datasets together, what's the predictive posterior probability of (Pro1 V Pro0) given the two datasets? Explain briefly. You may give your answer in terms of a conjugate prior and/or integrals.

f. If we want our learner to assign appropriate probabilities to syntactic rules, what is problematic about merging the two datasets together? How could these problems be avoided; how would the model need to be changed?

g. Describe a way to expand our simple model into a hierarchical one that would enable the learner to capture the fact that she is hearing two different languages. Be explicit about any prior distributions you would use, and use plate notation if appropriate.

h. In the expanded model, what do the highest levels capture about the common characteristics of both languages? How will this influence predictions about other languages the learner might hear in the future?

Mark allocations, in the order printed: [2 marks], [1 mark], [3 marks], [3 marks], [3 marks], [2 marks], [4 marks], [4 marks], [3 marks]
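
As a rough illustration of the setup in parts c and e, the sketch below counts S rules and applies a conjugate update. It is only one possible reading, not a model answer. It assumes a multinomial (categorical) likelihood over S rules with a symmetric Dirichlet prior, and a hypothesis space of the six rules that put V second. It also reads the third D2 utterance as Pro1 V Pro0, following the example "Him blessed she". The names `RULES`, `posterior_params` and `predictive` are hypothetical.

```python
from fractions import Fraction
from itertools import product

PRONOUNS = ("Pro0", "Pro1")

# Assumed hypothesis space: every S rule with V in second position
# (two intransitive rules, four transitive rules).
RULES = [(subj, "V") for subj in PRONOUNS] + [
    (subj, "V", obj) for subj, obj in product(PRONOUNS, repeat=2)
]

D1 = [("Pro0", "V")] * 2 + [("Pro0", "V", "Pro1")] * 2
# Assumption: the third D2 utterance is Pro1 V Pro0 ("Him blessed she").
D2 = [("Pro0", "V")] * 2 + [("Pro1", "V", "Pro0")]


def posterior_params(data, alpha):
    """Dirichlet posterior parameters: alpha plus the observed count of each rule."""
    return {rule: alpha + data.count(rule) for rule in RULES}


def predictive(rule, data, alpha):
    """Posterior predictive probability of `rule`, i.e. the posterior mean of its weight."""
    params = posterior_params(data, alpha)
    total = sum(Fraction(value) for value in params.values())
    return Fraction(params[rule]) / total


if __name__ == "__main__":
    print(posterior_params(D1, alpha=1))
    print(predictive(("Pro1", "V", "Pro0"), D1 + D2, alpha=1))
```

Under these assumptions the merged-data predictive probability of Pro1 V Pro0 is (α + 1) / (6α + 7). A very large α pulls it toward the uniform value 1/6, while a very small α pulls it toward the empirical frequency 1/7.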
