Question:

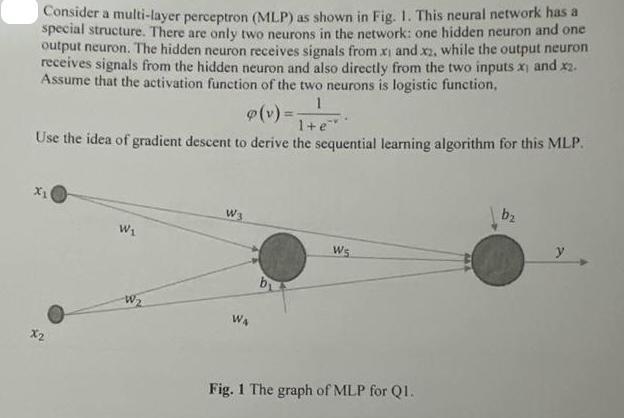

Consider a multi-layer perceptron (MLP) as shown in Fig. 1. This neural network has a special structure. There are only two neurons in the network: one hidden neuron and one output neuron. The hidden neuron receives signals from $x_1$ and $x_2$, while the output neuron receives signals from the hidden neuron and also directly from the two inputs $x_1$ and $x_2$. Assume that the activation function of the two neurons is the logistic function, $\varphi(v) = \frac{1}{1 + e^{-v}}$. Use the idea of gradient descent to derive the sequential learning algorithm for this MLP.

Fig. 1: The graph of the MLP for Q1.

Step by Step Solution
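
One way to approach the derivation is sketched below; it is a minimal illustration under stated assumptions, not the official solution. The weight labels in Fig. 1 are not legible here, so the names `w1`, `w2`, `b1` (hidden neuron) and `w3`, `w4`, `w5`, `b2` (output neuron) are hypothetical. The sketch also assumes the squared-error cost $E = \frac{1}{2}(d - y)^2$ for a single sample with target $d$. With $h = \varphi(w_1 x_1 + w_2 x_2 + b_1)$ and $y = \varphi(w_3 x_1 + w_4 x_2 + w_5 h + b_2)$, the chain rule and $\varphi'(v) = \varphi(v)(1 - \varphi(v))$ give the local gradients $\delta_o = (d - y)\,y(1 - y)$ and $\delta_h = \delta_o\, w_5\, h(1 - h)$, and each weight moves by $\eta$ times its neuron's $\delta$ times the signal on that connection.

```python
import math


def logistic(v):
    """Logistic activation: 1 / (1 + exp(-v))."""
    return 1.0 / (1.0 + math.exp(-v))


def forward(p, x1, x2):
    """Forward pass. Parameter names are assumed, not taken from Fig. 1."""
    # Hidden neuron sees only the two inputs.
    h = logistic(p["w1"] * x1 + p["w2"] * x2 + p["b1"])
    # Output neuron sees both inputs directly plus the hidden output.
    y = logistic(p["w3"] * x1 + p["w4"] * x2 + p["w5"] * h + p["b2"])
    return h, y


def sequential_step(p, x1, x2, d, eta=0.5):
    """One per-sample gradient-descent update for E = 0.5 * (d - y)**2.

    Returns a new parameter dict; the input dict is left unchanged.
    """
    h, y = forward(p, x1, x2)

    # Local gradient of the output neuron.
    delta_o = (d - y) * y * (1.0 - y)
    # Local gradient of the hidden neuron, back-propagated through w5
    # (computed with the value of w5 from before the update).
    delta_h = delta_o * p["w5"] * h * (1.0 - h)

    new = dict(p)
    # Output-neuron weights: eta * delta_o * (signal on that connection).
    new["w3"] += eta * delta_o * x1
    new["w4"] += eta * delta_o * x2
    new["w5"] += eta * delta_o * h
    new["b2"] += eta * delta_o
    # Hidden-neuron weights: eta * delta_h * (signal on that connection).
    new["w1"] += eta * delta_h * x1
    new["w2"] += eta * delta_h * x2
    new["b1"] += eta * delta_h
    return new
```

In sequential (online) mode, `sequential_step` would be called once per training sample, cycling through the data set for several epochs, rather than accumulating gradients over a batch. The direct input-to-output connections contribute only to the output neuron's updates; the error reaches the hidden neuron solely through `w5`.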
