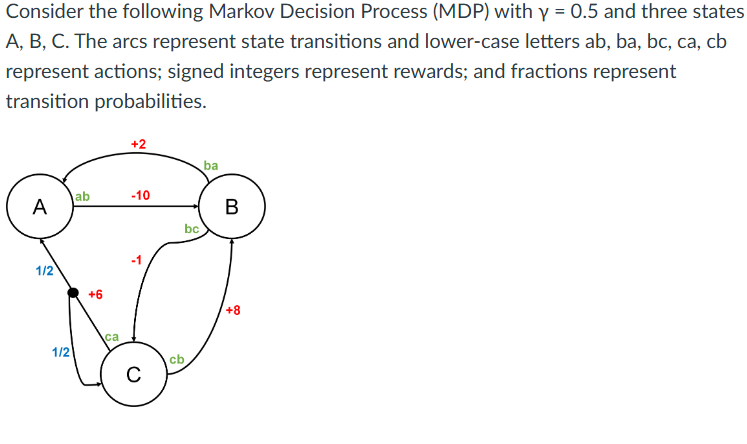

Question: Consider the following Markov Decision Process (MDP) with $\gamma = 0.5$ and three states A, B, C. The arcs represent state transitions; lowercase letters (ab, ba, bc, ca, cb) represent actions; signed integers represent rewards; and fractions represent transition probabilities.

Write down the Bellman expectation equation for state-value functions.
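For reference, one standard way to write it for a policy $\pi$ is shown below. It assumes, as the arc labels suggest, that each transition $(s, a, s')$ carries a reward $R(s, a, s')$ and a probability $P(s' \mid s, a)$:

$$
V^{\pi}(s) = \sum_{a} \pi(a \mid s) \sum_{s'} P(s' \mid s, a)\,\bigl[ R(s, a, s') + \gamma\, V^{\pi}(s') \bigr]
$$

The value of a state is the policy-weighted average, over actions and successor states, of the immediate reward plus the discounted value of the successor.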

Consider the uniform random policy $\pi(a \mid s)$ that takes every action available in state $s$ with equal probability. Starting from initial values $V(A)$, $V(B)$, $V(C)$, apply one synchronous iteration of iterative policy evaluation (i.e., one backup for each state) to compute a new value function $V'(s)$, i.e., $V'(A)$, $V'(B)$, and $V'(C)$, by expanding the Bellman expectation equation from the previous part.
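The synchronous backup can be sketched in Python. This is a minimal sketch that assumes each state's actions are encoded as lists of `(probability, next_state, reward)` triples; that dictionary layout and the name `policy_evaluation_sweep` are hypothetical choices, not part of the question. It computes $V'(s) = \sum_a \pi(a \mid s) \sum_{s'} P(s' \mid s, a)\,[R(s, a, s') + \gamma V(s')]$ with $\pi(a \mid s) = 1/|\mathcal{A}(s)|$, reading only the old values $V$ so that every state is updated from the same snapshot.

```python
from typing import Dict, List, Tuple

# Hypothetical encoding (not from the question):
# mdp[state][action] -> list of (probability, next_state, reward) outcomes.
Outcome = Tuple[float, str, float]
MDP = Dict[str, Dict[str, List[Outcome]]]


def policy_evaluation_sweep(mdp: MDP, v: Dict[str, float], gamma: float) -> Dict[str, float]:
    """Return V' after one synchronous backup under the uniform random policy.

    Assumes every state has at least one action, so pi(a|s) = 1 / |A(s)| is defined.
    """
    new_v: Dict[str, float] = {}
    for s, actions in mdp.items():
        pi = 1.0 / len(actions)  # uniform random policy: equal weight per action
        total = 0.0
        for outcomes in actions.values():
            for p, s_next, r in outcomes:
                # Immediate reward plus discounted OLD value of the successor,
                # weighted by policy probability and transition probability.
                total += pi * p * (r + gamma * v[s_next])
        new_v[s] = total  # written to a fresh dict, so all states read the same old v
    return new_v


# Hypothetical two-state MDP with invented rewards and probabilities, used only
# to exercise the function; it is NOT the MDP drawn in the figure.
toy_mdp: MDP = {
    "X": {"stay": [(1.0, "X", 1.0)], "go": [(0.5, "X", 0.0), (0.5, "Y", 2.0)]},
    "Y": {"back": [(1.0, "X", -1.0)]},
}
v0 = {"X": 2.0, "Y": 4.0}
v1 = policy_evaluation_sweep(toy_mdp, v0, gamma=0.5)
print(v1)  # {'X': 2.25, 'Y': 0.0}
```

To apply it to the question, one would replace `toy_mdp` with the arcs, rewards, and probabilities shown in the figure and `v0` with the given initial values; the returned dictionary then holds $V'(A)$, $V'(B)$, and $V'(C)$. Building a fresh dictionary rather than updating `v` in place is what makes the sweep synchronous; updating in place would give the in-place (Gauss-Seidel style) variant instead.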

Write the Bellman optimality equation that characterizes the optimal state-value function, i.e., $V^{*}(s)$.
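Assuming rewards $R(s, a, s')$ and probabilities $P(s' \mid s, a)$ are attached to each transition, as the arc labels suggest, the standard form replaces the average over the policy's actions with a maximum over the actions available in $s$:

$$
V^{*}(s) = \max_{a} \sum_{s'} P(s' \mid s, a)\,\bigl[ R(s, a, s') + \gamma\, V^{*}(s') \bigr]
$$

For a state with a single available action, such as A with only action ab, the maximum is taken over one term, so the optimality and expectation equations coincide for that state.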
