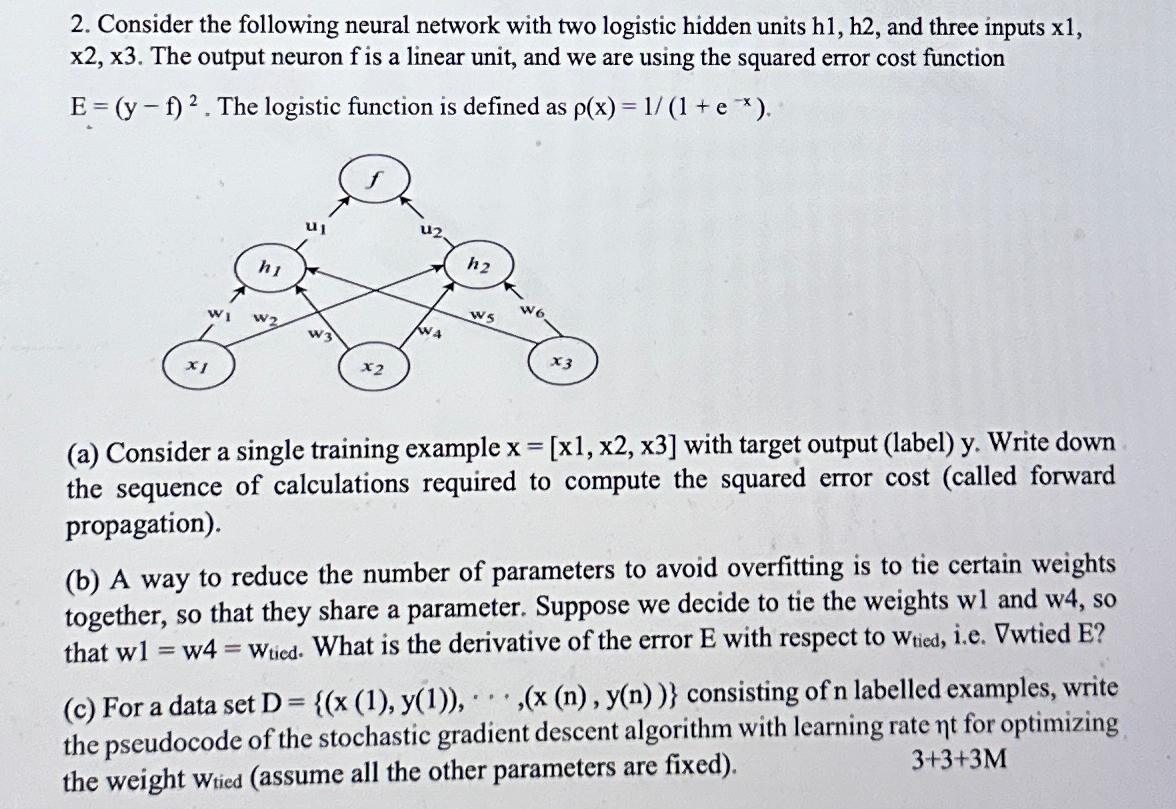

Question: Consider the following neural network with two logistic hidden units $h_1$, $h_2$ and three inputs ($x_1$, $x_2$, ...). The output neuron is a linear unit, and we are using the squared error cost function. The logistic function is defined as $\sigma(z) = \frac{1}{1 + e^{-z}}$.

(a) Consider a single training example with its target output label. Write down the sequence of calculations required to compute the squared error cost (called forward propagation).
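The forward pass asked for in part (a) can be sketched in code. This is only an illustration under stated assumptions, since the network figure is not reproduced in the text: the layers are assumed fully connected, there are no bias terms, and the cost is taken as $E = \frac{1}{2}(y - t)^2$. The names `W`, `v`, `z`, `h`, `y`, and `t` are hypothetical and may not match the labels in the figure.

```python
import math


def logistic(z):
    """Logistic (sigmoid) function: 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, W, v, t):
    """Forward propagation for a 3-input, 2-hidden-unit, 1-output network.

    Assumptions (not confirmed by the question text):
      x: list of 3 inputs
      W: 2x3 input-to-hidden weights, W[j][i] connects x[i] to hidden unit j
      v: 2 hidden-to-output weights
      t: target output
    """
    # 1. Pre-activations of the two hidden units.
    z = [sum(W[j][i] * x[i] for i in range(3)) for j in range(2)]
    # 2. Logistic hidden activations.
    h = [logistic(zj) for zj in z]
    # 3. Linear output unit.
    y = sum(v[j] * h[j] for j in range(2))
    # 4. Squared error cost (the 1/2 factor is an assumed convention).
    E = 0.5 * (y - t) ** 2
    return z, h, y, E
```

For example, with all input-to-hidden weights set to zero, both hidden units output 0.5, so `forward([1.0, 1.0, 1.0], [[0.0] * 3, [0.0] * 3], [1.0, 1.0], 0.0)` gives `y = 1.0` and `E = 0.5`.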

(b) A way to reduce the number of parameters to avoid overfitting is to tie certain weights together, so that they share a parameter. Suppose we decide to tie two of the weights in the network so that both equal a shared parameter $w_{\text{tied}}$. What is the derivative of the error $E$ with respect to $w_{\text{tied}}$, i.e. $\partial E / \partial w_{\text{tied}}$?
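The general principle behind part (b) follows from the chain rule. As a sketch, write the two tied weights as $w_a$ and $w_b$ (hypothetical names, since the actual labels come from the figure) and set $w_a = w_b = w_{\text{tied}}$. The error then depends on $w_{\text{tied}}$ through both weights, and the two contributions add:

$$
\frac{\partial E}{\partial w_{\text{tied}}}
= \frac{\partial E}{\partial w_a}\,\frac{\partial w_a}{\partial w_{\text{tied}}}
+ \frac{\partial E}{\partial w_b}\,\frac{\partial w_b}{\partial w_{\text{tied}}}
= \frac{\partial E}{\partial w_a} + \frac{\partial E}{\partial w_b}
$$

Each term on the right is the ordinary backpropagation derivative for that weight, evaluated with both weights equal to the shared value.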

(c) For a data set consisting of labelled examples, write the pseudocode of the stochastic gradient descent algorithm with a given learning rate for optimizing the weight $w_{\text{tied}}$ (assume all the other parameters are fixed).
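A runnable sketch of the stochastic gradient descent loop in part (c) is given below. It rests on several assumptions that the text does not confirm: a fully connected network with no biases, the cost $E = \frac{1}{2}(y - t)^2$, and a tied pair consisting of two input-to-hidden weights whose positions are listed in `TIED`. That choice of positions, and the names `W`, `v`, `lr`, and `epochs`, are hypothetical and chosen only for illustration.

```python
import math
import random

# Hypothetical choice: (hidden unit, input) positions that share w_tied.
TIED = [(0, 0), (1, 1)]


def logistic(z):
    """Logistic (sigmoid) function."""
    return 1.0 / (1.0 + math.exp(-z))


def grad_w_tied(x, t, W, v):
    """dE/dw_tied for one example, with E = 0.5 * (y - t)**2.

    The tied gradient is the sum of the gradients of the individual
    tied weights: dE/dW[j][i] = (y - t) * v[j] * h[j] * (1 - h[j]) * x[i].
    """
    h = [logistic(sum(W[j][i] * x[i] for i in range(3))) for j in range(2)]
    y = sum(v[j] * h[j] for j in range(2))
    return sum((y - t) * v[j] * h[j] * (1.0 - h[j]) * x[i] for j, i in TIED)


def sgd_w_tied(data, W, v, w_tied, lr=0.1, epochs=100, seed=0):
    """Optimize only w_tied by SGD; all other parameters stay fixed.

    data: list of (x, t) pairs, x a list of 3 inputs, t a target.
    """
    rng = random.Random(seed)
    W = [row[:] for row in W]  # copy so the caller's weights are untouched
    for _ in range(epochs):
        order = list(range(len(data)))
        rng.shuffle(order)  # visit the examples in random order
        for n in order:
            x, t = data[n]
            for j, i in TIED:
                W[j][i] = w_tied  # write the shared value into both slots
            w_tied -= lr * grad_w_tied(x, t, W, v)  # one update per example
    return w_tied
```

The key design point is that a single scalar is updated once per training example, using the summed gradient of the two tied positions, while every other weight is left unchanged.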

PLEASE WRITE THE COMPLETE CALCULATED SOLUTION ONLY
