Question: Getting an error when trying to run "model" with my forward_backward function, not sure what is wrong

![Question screenshot](https://dsd5zvtm8ll6.cloudfront.net/si.experts.images/questions/2024/09/66f5392224456_97766f539219711b.jpg)

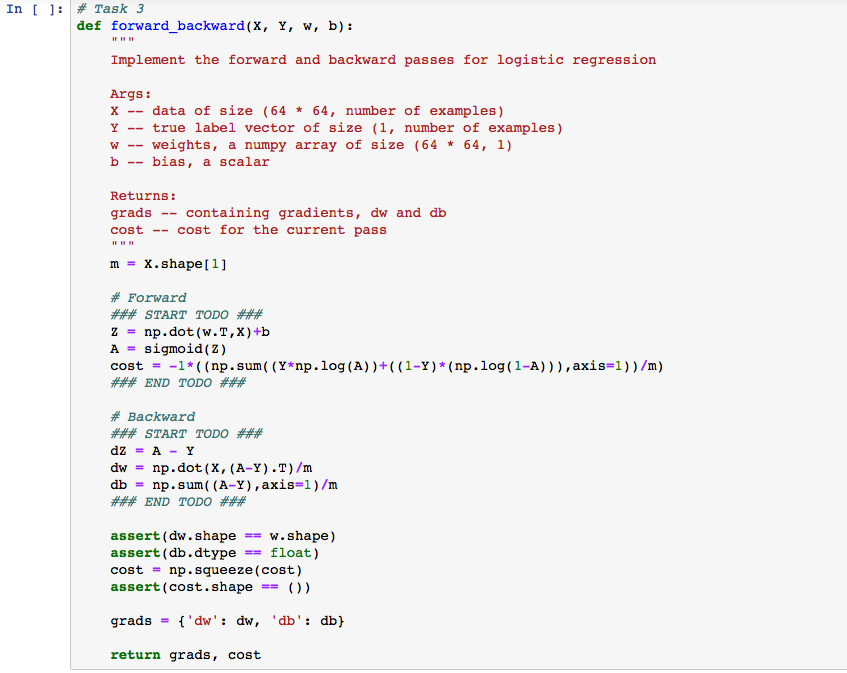

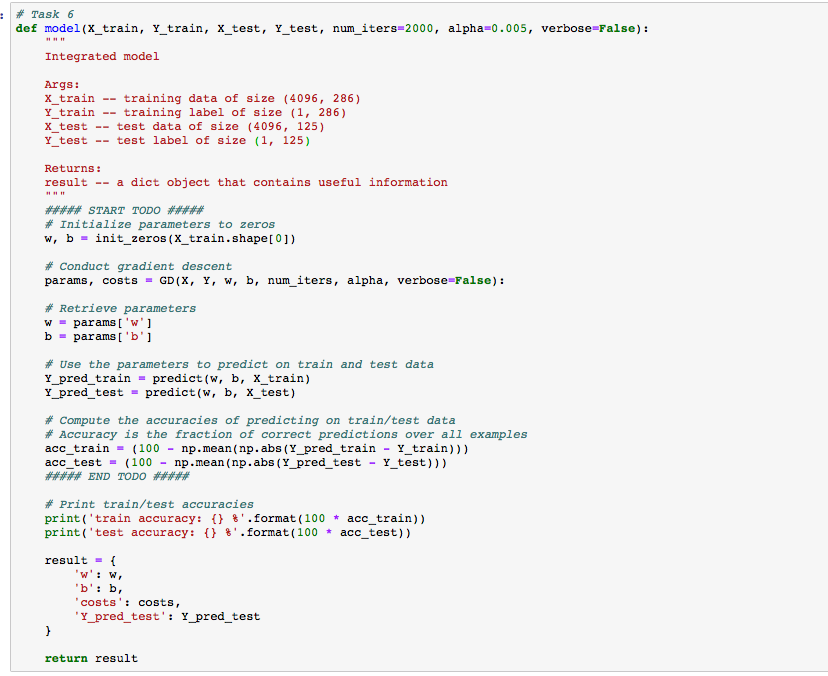

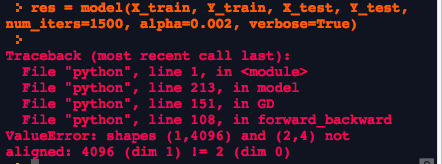

I am getting this error when trying to run "model". I am not sure what is wrong, please help.

In [ ]:

    # Task 3
    def forward_backward(X, Y, w, b):
        """
        Implement the forward and backward passes for logistic regression

        Args:
        X -- data of size (64 * 64, number of examples)
        Y -- true label vector of size (1, number of examples)
        w -- weights, a numpy array of size (64 * 64, 1)
        b -- bias, a scalar

        Returns:
        grads -- containing gradients, dw and db
        cost -- cost for the current pass
        """
        m = X.shape[1]

        # Forward
        ### START TODO ###
        Z = np.dot(w.T, X) + b
        A = sigmoid(Z)
        cost = -1 * ((np.sum((Y * np.log(A)) + ((1 - Y) * (np.log(1 - A))), axis=1)) / m)
        ### END TODO ###

        # Backward
        ### START TODO ###
        dw = np.dot(X, (A - Y).T) / m
        db = np.sum((A - Y), axis=1) / m
        ### END TODO ###

        assert (dw.shape == w.shape)
        assert (db.dtype == float)
        cost = np.squeeze(cost)
        assert (cost.shape == ())

        return grads, cost
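The error message itself is only in the screenshots, so the following is a sketch of a working version rather than a confirmed diagnosis. Two things stand out in the posted cell: `grads` is returned but never assigned in the visible code (which would raise a `NameError`), and `db` computed with `axis=1` is a length-1 array rather than a scalar, which can cause shape problems once `model` uses it to update `b`. The `sigmoid` helper and the `{"dw": ..., "db": ...}` layout of `grads` below are assumptions; the notebook presumably defines its own versions.

```python
import numpy as np


def sigmoid(z):
    # Assumed helper: the notebook presumably provides its own sigmoid.
    return 1.0 / (1.0 + np.exp(-z))


def forward_backward(X, Y, w, b):
    """Forward and backward pass for logistic regression (sketch)."""
    m = X.shape[1]  # number of examples

    # Forward pass
    Z = np.dot(w.T, X) + b  # shape (1, m)
    A = sigmoid(Z)          # shape (1, m)
    cost = -np.sum(Y * np.log(A) + (1 - Y) * np.log(1 - A)) / m

    # Backward pass
    dw = np.dot(X, (A - Y).T) / m  # shape (n, 1), same as w
    db = np.sum(A - Y) / m         # scalar, not a length-1 array

    assert dw.shape == w.shape
    assert db.dtype == float
    cost = np.squeeze(cost)
    assert cost.shape == ()

    # Assumption: gradients are returned in a dict keyed by "dw" and "db".
    grads = {"dw": dw, "db": db}
    return grads, cost


# Tiny check with 2 features and 2 examples (made-up numbers).
X_demo = np.array([[1.0, 2.0], [3.0, 4.0]])
Y_demo = np.array([[1.0, 0.0]])
w_demo = np.zeros((2, 1))
grads_demo, cost_demo = forward_backward(X_demo, Y_demo, w_demo, 0.0)
print(grads_demo["dw"].shape, float(grads_demo["db"]), float(cost_demo))
```

With zero weights every prediction is 0.5, so the cost in this check should equal log(2). If `model` still fails after this, the traceback line number will show whether the problem is in `forward_backward` or in the code that calls it.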
