Question: Please write MATLAB code for Algorithm 1, EM (noiseless case), shown below.

Here is a link to the full paper, if you need it: https://arxiv.org/pdf/1310.3745.pdf

Run the algorithm for 500 iterations (iterations 1 to 500).

Line 3 says that J1 and J2 start as two empty sets.

If possible, use randn(300,5) as the data matrix X.

X is assumed to be multivariate normal.
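A minimal MATLAB sketch of how test data could be generated with X = randn(300,5), assuming (as the fractions $p_1, p_2$ of observations in Section 2.1 suggest) that each noiseless response y_i is produced by exactly one of two coefficient vectors. The names beta1_true, beta2_true, p1 and z are hypothetical placeholders for illustration, not values from the paper.

```matlab
% Synthetic noiseless data for Algorithm 1 (illustration only).
N = 300;  d = 5;
X = randn(N, d);              % each row x_i ~ N(0, I_d): multivariate normal

% Hypothetical ground truth (not from the paper): two coefficient vectors
% and a probability p1 that a sample is generated by the first one.
beta1_true = randn(d, 1);
beta2_true = randn(d, 1);
p1 = 0.5;

z = rand(N, 1) < p1;          % hidden labels: true -> beta1_true, false -> beta2_true
y = zeros(N, 1);
y(z)  = X(z, :)  * beta1_true;   % noiseless: y_i = <x_i, beta1_true>
y(~z) = X(~z, :) * beta2_true;   % noiseless: y_i = <x_i, beta2_true>
```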

From line 5 on, the algorithm says: if the inequality is true, put i into J1; otherwise, put i into J2.
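Read literally, lines 3 to 10 (EM Part I) can be sketched in MATLAB as a loop that starts J1 and J2 empty and appends each index i to one of them. The data and the current estimates b1, b2 below are hypothetical random placeholders so the snippet runs on its own.

```matlab
% EM Part I of Algorithm 1 written line by line (sketch).
% Hypothetical placeholders so the snippet runs on its own:
N  = 300;  d = 5;
X  = randn(N, d);             % multivariate normal rows, as requested
y  = X * randn(d, 1);         % placeholder responses
b1 = randn(d, 1);             % current estimate beta_1^(t)
b2 = randn(d, 1);             % current estimate beta_2^(t)

J1 = [];  J2 = [];            % line 3: J1 and J2 start as empty sets
for i = 1:N                   % line 4
    r1 = abs(y(i) - X(i, :) * b1);   % |y_i - <x_i, beta_1^(t)>|
    r2 = abs(y(i) - X(i, :) * b2);   % |y_i - <x_i, beta_2^(t)>|
    if r1 < r2                % line 5: the inequality
        J1(end + 1) = i;      % line 6: put i into J1
    else
        J2(end + 1) = i;      % line 8: otherwise put i into J2
    end
end
```

The same split can be computed without a loop as `in1 = abs(y - X*b1) < abs(y - X*b2); J1 = find(in1); J2 = find(~in1);`, which is usually faster in MATLAB.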

Please let me know if there are questions.

Thank you

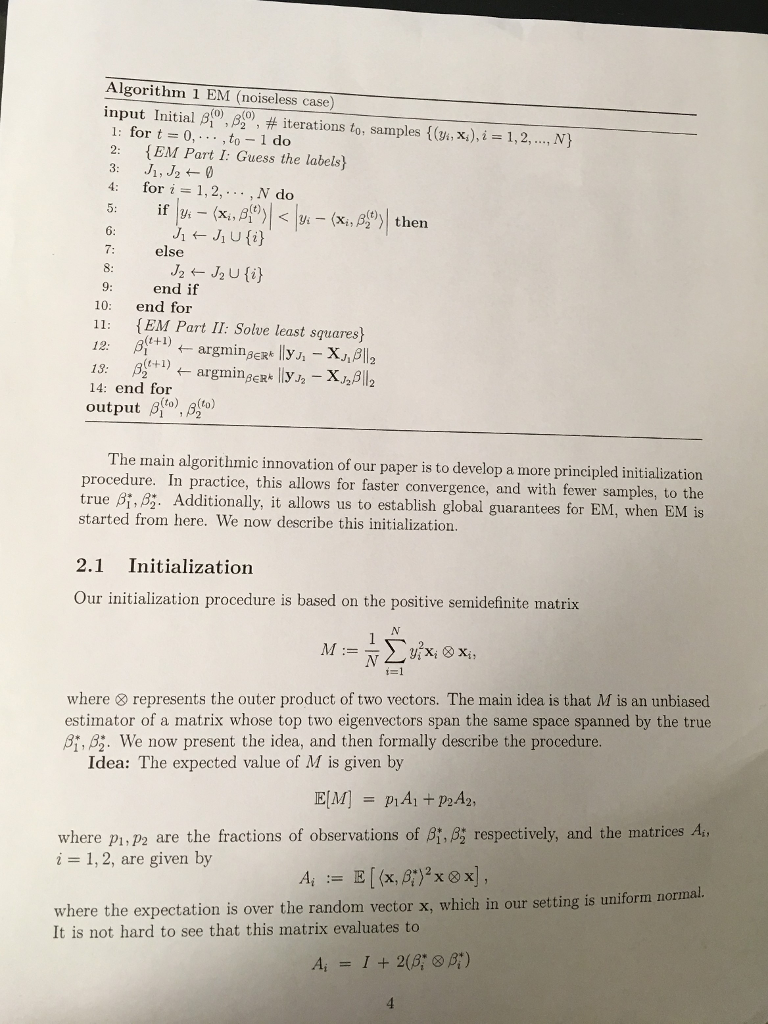

Algorithm 1 EM (noiseless case)
Input: initial $\beta_1^{(0)}, \beta_2^{(0)}$, number of iterations $t_0$, samples $\{(y_i, x_i),\ i = 1, 2, \dots, N\}$
1: for $t = 0, \dots, t_0 - 1$ do
2: EM Part I: Guess the labels
3: $J_1, J_2 \leftarrow \emptyset$
4: for $i = 1, 2, \dots, N$ do
5: if $|y_i - \langle x_i, \beta_1^{(t)} \rangle| < |y_i - \langle x_i, \beta_2^{(t)} \rangle|$ then
6: $J_1 \leftarrow J_1 \cup \{i\}$
7: else
8: $J_2 \leftarrow J_2 \cup \{i\}$
9: end if
10: end for
11: EM Part II: Solve least squares
12: $\beta_1^{(t+1)} \leftarrow \arg\min_{\beta} \|y_{J_1} - X_{J_1}\beta\|_2$
13: $\beta_2^{(t+1)} \leftarrow \arg\min_{\beta} \|y_{J_2} - X_{J_2}\beta\|_2$
14: end for
Output: $\beta_1^{(t_0)}, \beta_2^{(t_0)}$

The main algorithmic innovation of our paper is to develop a more principled initialization procedure. In practice, this allows for faster convergence, and with fewer samples, to the true $\beta_1^*, \beta_2^*$. Additionally, it allows us to establish global guarantees for EM, when EM is started from here. We now describe this initialization.

2.1 Initialization

Our initialization procedure is based on the positive semidefinite matrix
$$M = \frac{1}{N} \sum_{i=1}^{N} y_i^2 \, x_i \otimes x_i,$$
where $\otimes$ represents the outer product of two vectors. The main idea is that $M$ is an unbiased estimator of a matrix whose top two eigenvectors span the same space spanned by the true $\beta_1^*, \beta_2^*$. We now present the idea, and then formally describe the procedure.

Idea: The expected value of $M$ is given by
$$\mathbb{E}[M] = p_1 A_1 + p_2 A_2,$$
where $p_1, p_2$ are the fractions of observations of $\beta_1^*, \beta_2^*$ respectively, and the matrices $A_i$, $i = 1, 2$, are given by
$$A_i = \mathbb{E}\left[ \langle x, \beta_i^* \rangle^2 \, x \otimes x \right],$$
where the expectation is over the random vector $x$, which in our setting is uniform normal. It is not hard to see that this matrix evaluates to
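A minimal MATLAB sketch of Algorithm 1 run for 500 iterations on X = randn(300,5). MATLAB's backslash operator returns the least-squares solution of an overdetermined system, which is what lines 12 and 13 ask for. Assumptions that are not from the paper: the hypothetical true coefficients beta1_true and beta2_true, the 50/50 split between the two components, random starting points b1 and b2 in place of the Section 2.1 initialization, and a guard that keeps the previous estimate when a set has fewer than d = 5 samples.

```matlab
% Algorithm 1 (EM, noiseless case): a sketch with hypothetical data and starting points.
N = 300;  d = 5;  T = 500;          % samples, dimension, number of iterations t0
X = randn(N, d);                    % rows x_i ~ N(0, I_d)

% Hypothetical ground truth: each sample comes from one of two vectors.
beta1_true = randn(d, 1);
beta2_true = randn(d, 1);
z = rand(N, 1) < 0.5;               % hidden labels (unknown to EM)
y = zeros(N, 1);
y(z)  = X(z, :)  * beta1_true;      % noiseless responses
y(~z) = X(~z, :) * beta2_true;

% Hypothetical initial estimates beta_1^(0), beta_2^(0) (random, not Section 2.1).
b1 = randn(d, 1);
b2 = randn(d, 1);

for t = 1:T
    % EM Part I (lines 3-10): i goes to J1 if b1 fits it strictly better, else J2.
    in1 = abs(y - X * b1) < abs(y - X * b2);
    J1 = find(in1);
    J2 = find(~in1);

    % EM Part II (lines 12-13): least squares on each set via backslash.
    % Hypothetical guard: skip an update if its set is too small to determine b.
    if numel(J1) >= d
        b1 = X(J1, :) \ y(J1);
    end
    if numel(J2) >= d
        b2 = X(J2, :) \ y(J2);
    end
end

% Output beta_1^(t0), beta_2^(t0); the labels 1/2 may come out swapped.
err = min(norm(b1 - beta1_true) + norm(b2 - beta2_true), ...
          norm(b1 - beta2_true) + norm(b2 - beta1_true));
fprintf('estimation error after %d iterations: %.3e\n', T, err);
```

From a random start, EM is not guaranteed to reach the true coefficients, so the printed error may be nonzero on some runs. The error is minimized over both orderings because the labels 1 and 2 are interchangeable.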
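To see the idea behind Section 2.1 numerically, the MATLAB sketch below forms $M = \frac{1}{N}\sum_i y_i^2 \, x_i \otimes x_i$ on synthetic data and measures how much of each true coefficient vector lies in the span of M's top two eigenvectors. It stops there: the excerpt does not spell out how the paper turns this subspace into starting points, so nothing beyond the eigenvector step is reproduced. The true coefficients are hypothetical placeholders.

```matlab
% Section 2.1 idea: top-two eigenvectors of M = (1/N) * sum_i y_i^2 * x_i * x_i'.
N = 300;  d = 5;
X = randn(N, d);                      % rows x_i ~ N(0, I_d)

% Hypothetical ground truth (illustration only).
beta1_true = randn(d, 1);
beta2_true = randn(d, 1);
z = rand(N, 1) < 0.5;
y = zeros(N, 1);
y(z)  = X(z, :)  * beta1_true;
y(~z) = X(~z, :) * beta2_true;

% Row i of X .* y.^2 is y_i^2 * x_i', so X' * (X .* y.^2) = sum_i y_i^2 x_i x_i'.
M = (X' * (X .* y.^2)) / N;
M = (M + M') / 2;                     % remove tiny rounding asymmetry

[V, D] = eig(M);
[~, order] = sort(diag(D), 'descend');
U = V(:, order(1:2));                 % orthonormal basis of the top-two eigenspace

% Fraction of each true vector's length captured by span(U) (1 = fully inside).
proj = @(b) norm(U' * b) / norm(b);
frac1 = proj(beta1_true);
frac2 = proj(beta2_true);
fprintf('beta1: %.3f  beta2: %.3f\n', frac1, frac2);
```

Because the sample M only matches its expected value on average, the printed fractions will generally be below 1 for finite N and should approach 1 as N grows.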
