Question:

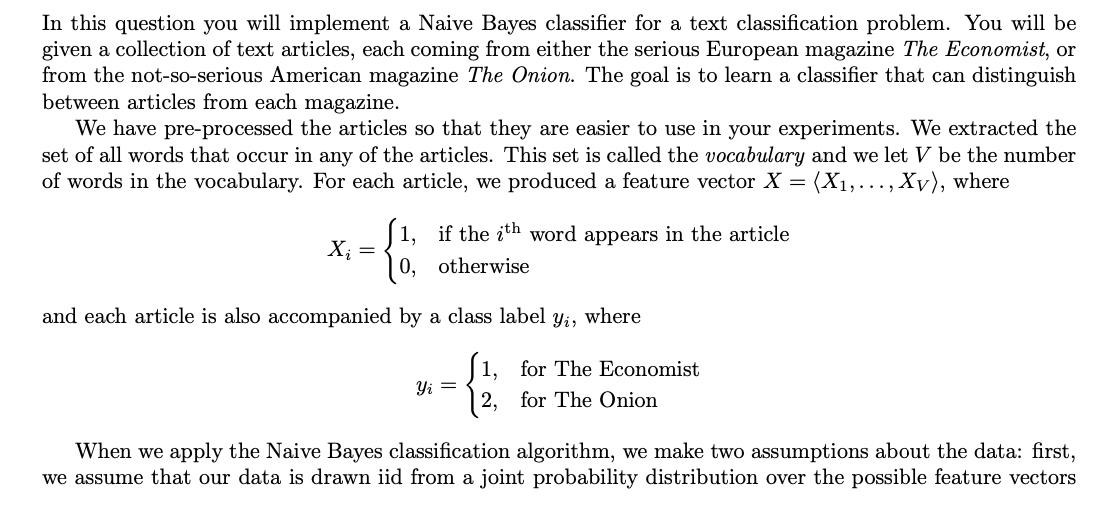

In this question you will implement a Naive Bayes classifier for a text classification problem. You will be given a collection of text articles, each coming from either the serious European magazine The Economist or the not-so-serious American magazine The Onion. The goal is to learn a classifier that can distinguish between articles from each magazine.

We have pre-processed the articles so that they are easier to use in your experiments. We extracted the set of all words that occur in any of the articles. This set is called the vocabulary, and we let $V$ be the number of words in the vocabulary. For each article, we produced a feature vector $X = (X_1, \ldots, X_V)$, where $X_i = 1$ if the $i$-th word appears in the article and $X_i = 0$ otherwise. Each article is also accompanied by a class label

$$Y = \begin{cases} 1 & \text{for The Economist,} \\ 2 & \text{for The Onion.} \end{cases}$$

When we apply the Naive Bayes classification algorithm, we make two assumptions about the data. First, we assume that our data is drawn i.i.d. from a joint probability distribution over the possible feature vectors $X$ and the corresponding class labels $Y$. Second, we assume that for each pair of features $X_i$ and $X_j$ with $i \neq j$, $X_i$ is conditionally independent of $X_j$ given the class label $Y$ (this is the Naive Bayes assumption). Under these assumptions, a natural classification rule is: given a new input $X$, predict the most probable class label given $X$. Formally,

$$\hat{Y} = \arg\max_{y} P(Y = y \mid X).$$

Using Bayes' rule and the Naive Bayes assumption, we can rewrite this classification rule as follows:

$$\begin{aligned}
\hat{Y} &= \arg\max_{y} \frac{P(X \mid Y = y)\,P(Y = y)}{P(X)} && \text{(Bayes' rule)} \\
&= \arg\max_{y} P(X \mid Y = y)\,P(Y = y) && \text{(the denominator does not depend on } y \text{)} \\
&= \arg\max_{y} P(X_1, \ldots, X_V \mid Y = y)\,P(Y = y) \\
&= \arg\max_{y} \left( \prod_{w=1}^{V} P(X_w \mid Y = y) \right) P(Y = y) && \text{(conditional independence)}
\end{aligned}$$

Of course, since we don't know the true joint distribution over feature vectors $X$ and class labels $Y$, we need to estimate the probabilities $P(X \mid Y = y)$ and $P(Y = y)$ from the training data.
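The final product over $V$ words can underflow floating-point arithmetic when $V$ is the size of a real vocabulary, so the rule is usually evaluated as a sum of logarithms; because $\log$ is monotonic, the argmax is unchanged. A minimal Python sketch, assuming binary feature vectors given as lists of 0/1 and hypothetical, already-estimated tables `cond[y][w]` $= P(X_w = 1 \mid Y = y)$ and `prior[y]` $= P(Y = y)$:

```python
import math
from typing import Dict, List, Optional, Sequence


def predict(x: Sequence[int],
            cond: Dict[int, List[float]],
            prior: Dict[int, float]) -> Optional[int]:
    """Return argmax_y of log P(Y=y) + sum_w log P(X_w = x_w | Y=y).

    cond[y][w] is a hypothetical table holding P(X_w = 1 | Y = y). Every
    entry must lie strictly between 0 and 1 so that both log(q) and
    log(1 - q) are finite.
    """
    best_label: Optional[int] = None
    best_score = -math.inf
    for y, p_y in prior.items():
        score = math.log(p_y)  # log P(Y = y)
        for x_w, q in zip(x, cond[y]):
            # Binary feature: P(X_w = 1 | y) = q and P(X_w = 0 | y) = 1 - q.
            score += math.log(q) if x_w == 1 else math.log(1.0 - q)
        if score > best_score:
            best_label, best_score = y, score
    return best_label


if __name__ == "__main__":
    # Toy parameters for a 3-word vocabulary (made up for illustration).
    cond = {1: [0.9, 0.2, 0.5], 2: [0.1, 0.7, 0.5]}
    prior = {1: 0.5, 2: 0.5}
    print(predict([1, 0, 1], cond, prior))  # prints 1
    print(predict([0, 1, 0], cond, prior))  # prints 2
```

With these toy numbers, an article containing words 0 and 2 is assigned to class 1 under a uniform prior, while a strongly skewed prior such as `{1: 0.01, 2: 0.99}` flips the same article to class 2.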
For each word index $w \in \{1, \ldots, V\}$ and class label $y \in \{1, 2\}$, the distribution of $X_w$ given $Y = y$ is a Bernoulli distribution with parameter $\theta_{yw}$. In other words, there is some unknown number $\theta_{yw}$ such that

$$P(X_w = 1 \mid Y = y) = \theta_{yw} \quad \text{and} \quad P(X_w = 0 \mid Y = y) = 1 - \theta_{yw}.$$

We believe that there is a non-zero (but maybe very small) probability that any word in the vocabulary can appear in an article from either The Onion or The Economist. To make sure that our estimated probabilities are always non-zero, we will impose a Beta(2, 1) prior on $\theta_{yw}$ and compute the MAP estimate from the training data.

Similarly, the distribution of $Y$ (when we consider it alone) is a Bernoulli distribution with parameter $p$. In other words, there is some unknown number $p$ such that

$$P(Y = 1) = p \quad \text{and} \quad P(Y = 2) = 1 - p.$$

In this case, since we have many examples of articles from both The Economist and The Onion, there is no risk of having zero-probability estimates, so we will instead use the MLE for the distribution of $Y$.

Questions

1. What would be the MAP estimate of $\theta_{yw} = P(X_w = 1 \mid Y = y)$ with a Beta(2, 1) prior distribution? [14 points]
2. What would be the MLE for the prior, $p = P(Y = 1)$? [8 points]
3. Given $\theta_{yw}$, what would be the value of $P(X_w \mid Y = y)$? [4 points]
4. How would you classify a test example? Write the classification rule equation for a new test sample $X^{\text{test}}$ using $\theta_{yw}$ and $p$. [8 points]
5. How do you think the train and test error would compare? Explain any significant differences. Hint: You can try to implement Naive Bayes for this question using Python libraries and by finding a similar dataset. [4 points]
6. If we have less training data for the same problem, does the prior have more or less impact on our classifier? Explain any possible difference between the train and test error in this case and when we used the whole data. [6 points]
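For Questions 1 to 3, here is a sketch based on standard Beta-Bernoulli conjugacy, not an official solution. Let $n_y$ be the number of training articles with label $y$ and $n_{yw}$ the number of those that contain word $w$. With a Beta($\alpha$, $\beta$) prior the posterior is Beta($\alpha + n_{yw}$, $\beta + n_y - n_{yw}$), whose mode is $(n_{yw} + \alpha - 1)/(n_y + \alpha + \beta - 2)$; for Beta(2, 1) this gives $\hat\theta_{yw} = (n_{yw} + 1)/(n_y + 1)$. The MLE of $p$ is the fraction of training articles labelled 1, and for a binary feature $P(X_w \mid Y = y) = \theta_{yw}^{X_w}(1 - \theta_{yw})^{1 - X_w}$. The function names `fit_bernoulli_nb` and `bernoulli_prob` are hypothetical, and the data are assumed to be lists of 0/1 vectors with labels in {1, 2}.

```python
from typing import Dict, List, Sequence, Tuple


def fit_bernoulli_nb(X: Sequence[Sequence[int]],
                     y: Sequence[int],
                     classes: Tuple[int, ...] = (1, 2)
                     ) -> Tuple[Dict[int, List[float]], float]:
    """Estimate (theta, p_hat) for a Bernoulli Naive Bayes model.

    theta[c][w] is the MAP estimate of P(X_w = 1 | Y = c) under a
    Beta(2, 1) prior, (n_cw + 1) / (n_c + 1); p_hat is the MLE of P(Y = 1).
    """
    vocab_size = len(X[0])
    theta: Dict[int, List[float]] = {}
    for c in classes:
        docs = [x for x, label in zip(X, y) if label == c]
        n_c = len(docs)  # number of training articles with label c
        # n_cw: number of class-c articles in which word w appears.
        counts = [sum(doc[w] for doc in docs) for w in range(vocab_size)]
        # Mode of the posterior Beta(2 + n_cw, 1 + n_c - n_cw).
        theta[c] = [(n_cw + 1) / (n_c + 1) for n_cw in counts]
    # MLE for the class prior: fraction of training articles labelled 1.
    p_hat = sum(1 for label in y if label == 1) / len(y)
    return theta, p_hat


def bernoulli_prob(x_w: int, theta_cw: float) -> float:
    """P(X_w = x_w | Y = c) = theta^x_w * (1 - theta)^(1 - x_w), x_w in {0, 1}."""
    return theta_cw ** x_w * (1.0 - theta_cw) ** (1 - x_w)


if __name__ == "__main__":
    # Four toy articles over a 3-word vocabulary (made up for illustration).
    X = [[1, 0, 1], [1, 1, 0], [0, 1, 1], [0, 1, 0]]
    y = [1, 1, 2, 2]
    theta, p_hat = fit_bernoulli_nb(X, y)
    print(p_hat)        # prints 0.5
    print(theta[1][0])  # prints 1.0: word 0 occurs in every class-1 article
```

One consequence worth checking: this prior keeps $\hat\theta_{yw}$ above zero, but if word $w$ appears in every class-$y$ training article then $\hat\theta_{yw} = 1$ and the estimate of $P(X_w = 0 \mid Y = y)$ is exactly zero (as `bernoulli_prob(0, 1.0)` returns), so a log-space classifier needs to handle that case.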
