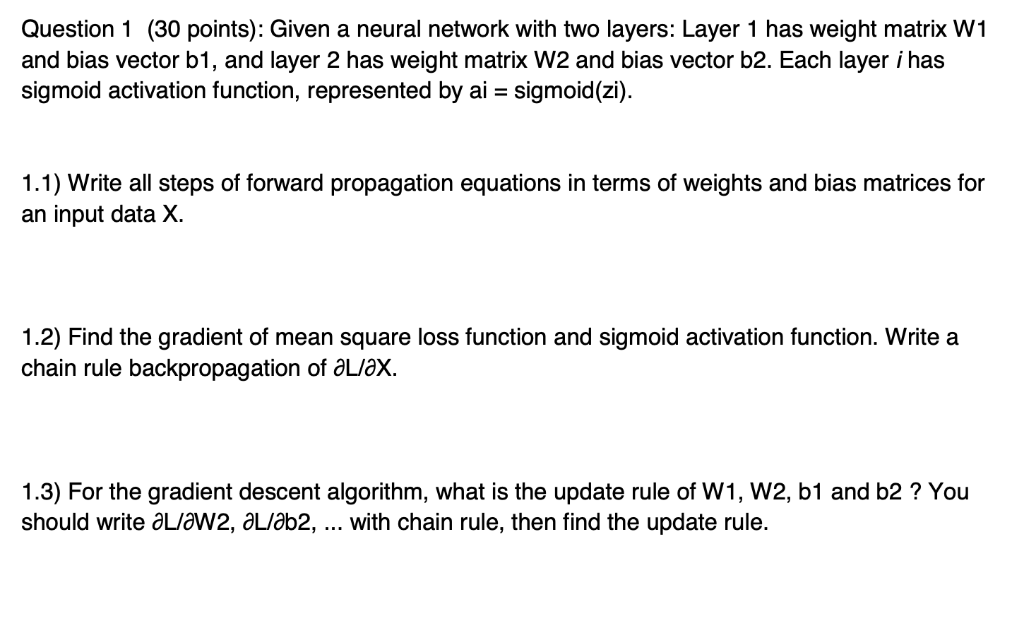

Question 1 (30 points): Given a neural network with two layers: layer 1 has weight matrix $W_1$ and bias vector $b_1$, and layer 2 has weight matrix $W_2$ and bias vector $b_2$. Each layer $i$ has a sigmoid activation function, represented by $a_i = \operatorname{sigmoid}(z_i)$.

1.1) Write all steps of the forward propagation equations in terms of the weight and bias matrices for input data $X$.

1.2) Find the gradients of the mean squared error loss function and of the sigmoid activation function. Write the chain-rule backpropagation of $\partial L / \partial X$.

1.3) For the gradient descent algorithm, what is the update rule for $W_1$, $W_2$, $b_1$ and $b_2$? You should write $\partial L / \partial W_2$ and $\partial L / \partial b_2$ with the chain rule, then find the update rule.
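A worked sketch of the derivation requested in 1.1 to 1.3 follows. It assumes a column-per-example layout for $X$, a targets matrix $Y$, $m$ examples, a learning rate $\eta$, and the MSE written with a factor $\tfrac{1}{2}$ to simplify the derivative; $Y$, $m$, $\eta$, $\delta_1$, $\delta_2$ and that factor are choices made here, not part of the question.

```latex
% Assumptions: column j of X is example j (m examples), Y holds the targets,
% \sigma is the sigmoid, and b_1, b_2 are broadcast across the m columns.

% 1.1 Forward propagation
z_1 = W_1 X + b_1, \qquad a_1 = \sigma(z_1) = \frac{1}{1 + e^{-z_1}}
z_2 = W_2 a_1 + b_2, \qquad a_2 = \sigma(z_2) = \hat{Y}

% 1.2 Gradient of the MSE loss and of the sigmoid
L = \frac{1}{2m} \lVert a_2 - Y \rVert_F^2, \qquad \frac{\partial L}{\partial a_2} = \frac{1}{m} (a_2 - Y)
\sigma'(z) = \sigma(z) \bigl(1 - \sigma(z)\bigr)

% Chain rule back through the layers (\odot is the element-wise product)
\delta_2 = \frac{\partial L}{\partial z_2} = \frac{\partial L}{\partial a_2} \odot \sigma'(z_2) = \frac{1}{m} (a_2 - Y) \odot a_2 \odot (1 - a_2)
\delta_1 = \frac{\partial L}{\partial z_1} = (W_2^\top \delta_2) \odot a_1 \odot (1 - a_1)
\frac{\partial L}{\partial X} = W_1^\top \delta_1

% 1.3 Parameter gradients (\mathbf{1}_m is a column of m ones)
\frac{\partial L}{\partial W_2} = \delta_2 a_1^\top, \qquad \frac{\partial L}{\partial b_2} = \delta_2 \mathbf{1}_m
\frac{\partial L}{\partial W_1} = \delta_1 X^\top, \qquad \frac{\partial L}{\partial b_1} = \delta_1 \mathbf{1}_m

% Gradient descent with learning rate \eta, for i = 1, 2
W_i \leftarrow W_i - \eta \frac{\partial L}{\partial W_i}, \qquad b_i \leftarrow b_i - \eta \frac{\partial L}{\partial b_i}
```

If the loss is defined without the $\frac{1}{2}$ or without the $\frac{1}{m}$ average, each gradient only changes by that constant factor; the form of the update rule stays the same.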

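The forward pass, the chain-rule backpropagation and the gradient descent update for this two-layer sigmoid network can be checked numerically. Below is a minimal pure-Python sketch (no NumPy, so it runs with the standard library only), assuming the loss $L = \frac{1}{2m}\sum_{j=1}^{m} \lVert a_2^{(j)} - y^{(j)} \rVert^2$ over $m$ examples. The helper names (`forward`, `gradients`, `gd_step`), the 2-3-1 layer sizes, the toy XOR-style data and the learning rate are hypothetical illustrations, not part of the question.

```python
import math
import random


def sigmoid(z):
    """Element-wise logistic sigmoid of a list of floats."""
    return [1.0 / (1.0 + math.exp(-v)) for v in z]


def affine(W, x, b):
    """Compute W x + b, with W stored as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


def forward(params, x):
    """Forward propagation for one example; returns (a1, a2)."""
    W1, b1, W2, b2 = params
    a1 = sigmoid(affine(W1, x, b1))   # a1 = sigmoid(W1 x + b1)
    a2 = sigmoid(affine(W2, a1, b2))  # a2 = sigmoid(W2 a1 + b2)
    return a1, a2


def loss(params, data):
    """Assumed MSE: L = 1/(2m) * sum over examples of ||a2 - y||^2."""
    m = len(data)
    total = 0.0
    for x, y in data:
        a2 = forward(params, x)[1]
        total += sum((p - t) ** 2 for p, t in zip(a2, y))
    return total / (2 * m)


def gradients(params, data):
    """Backpropagation; returns (dW1, db1, dW2, db2) for the whole batch."""
    W1, b1, W2, b2 = params
    m = len(data)
    dW1 = [[0.0] * len(W1[0]) for _ in W1]
    db1 = [0.0] * len(b1)
    dW2 = [[0.0] * len(W2[0]) for _ in W2]
    db2 = [0.0] * len(b2)
    for x, y in data:
        a1, a2 = forward(params, x)
        # delta2 = dL/dz2 = (a2 - y)/m * sigmoid'(z2), with sigmoid'(z2) = a2 (1 - a2)
        d2 = [(p - t) / m * p * (1 - p) for p, t in zip(a2, y)]
        # delta1 = dL/dz1 = (W2^T delta2) * a1 (1 - a1)
        d1 = [sum(W2[k][j] * d2[k] for k in range(len(d2))) * a1[j] * (1 - a1[j])
              for j in range(len(a1))]
        for k, dk in enumerate(d2):
            db2[k] += dk                  # dL/db2 = sum of delta2 over examples
            for j, aj in enumerate(a1):
                dW2[k][j] += dk * aj      # dL/dW2 = delta2 a1^T
        for j, dj in enumerate(d1):
            db1[j] += dj                  # dL/db1 = sum of delta1 over examples
            for i, xi in enumerate(x):
                dW1[j][i] += dj * xi      # dL/dW1 = delta1 x^T
    return dW1, db1, dW2, db2


def gd_step(params, grads, lr):
    """Gradient descent update: theta <- theta - lr * dL/dtheta for every parameter."""
    def sub(p, g):
        if isinstance(p[0], list):        # a matrix: recurse into its rows
            return [sub(pr, gr) for pr, gr in zip(p, g)]
        return [pv - lr * gv for pv, gv in zip(p, g)]
    return tuple(sub(p, g) for p, g in zip(params, grads))


if __name__ == "__main__":
    random.seed(0)
    # Hypothetical 2-3-1 network: W1 is 3x2, b1 has 3 entries, W2 is 1x3, b2 has 1.
    params = (
        [[random.uniform(-1, 1) for _ in range(2)] for _ in range(3)],
        [0.0] * 3,
        [[random.uniform(-1, 1) for _ in range(3)]],
        [0.0],
    )
    data = [([0.0, 0.0], [0.0]), ([0.0, 1.0], [1.0]),
            ([1.0, 0.0], [1.0]), ([1.0, 1.0], [0.0])]
    print("initial loss:", loss(params, data))
    for _ in range(500):
        params = gd_step(params, gradients(params, data), lr=0.5)
    print("loss after 500 steps:", loss(params, data))
```

Comparing each entry returned by `gradients` against the central difference $(L(\theta+\epsilon) - L(\theta-\epsilon)) / 2\epsilon$ is a quick way to confirm the backpropagation formulas. The explicit loops keep every chain-rule step visible at the cost of speed; a vectorized version would be the usual choice beyond toy sizes.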