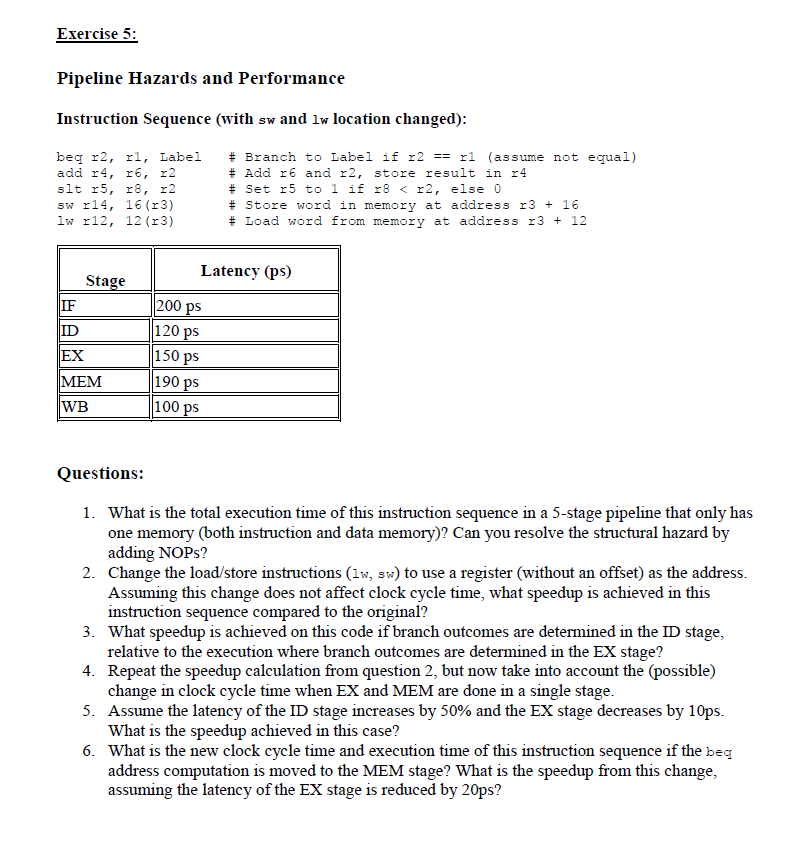

Question: Exercise 5 : Pipeline Hazards and Performance Instruction Sequence ( with sw and lw location changed ) : beq r 2 , r 1 ,

Exercise :

Pipeline Hazards and Performance

Instruction Sequence with sw and lw location changed:

beq r r Label # Branch to Label if r rassume not equal

add r r r # Add r and r store result in r

slt r r r # Set r to if r r else

sw rr # Store word in memory at address r

lw rr # Load word from memory at address r

Questions:

What is the total execution time of this instruction sequence in a stage pipeline that only has

one memory both instruction and data memory Can you resolve the structural hazard by

adding NOPs?

Change the loadstore instructions wsw to use a register without an offset as the address.

Assuming this change does not affect clock cycle time, what speedup is achieved in this

instruction sequence compared to the original?

What speedup is achieved on this code if branch outcomes are determined in the ID stage,

relative to the execution where branch outcomes are determined in the EX stage?

Repeat the speedup calculation from question but now take into account the possible

change in clock cycle time when EX and MEM are done in a single stage.

Assume the latency of the ID stage increases by and the EX stage decreases by ps

What is the speedup achieved in this case?

What is the new clock cycle time and execution time of this instruction sequence if the beq

address computation is moved to the MEM stage? What is the speedup from this change,

assuming the latency of the EX stage is reduced by ps

Given the sequence of instructions and the use of beq, indicate where NOPs should be inserted to avoid data hazards if any assuming no forwarding and a stage pipeline.

What is the clock cycle time in a pipelined processor and in a nonpipelined processor, using the given stage latencies? Consider the impact of each instruction on execution time and performance.

Exercise :

Cache and Memory Performance Evaluation

You are tasked with analyzing the performance of a CPU with the following configuration:

Address Space: bit addresses GB addressable memory

Cache Configuration:

o Cache Size: KB

o Cache Line Block Size: bytes

o Cache Associativity: way set associative

o Write Policy: Writeback

o Write Allocation: Writeallocate on write miss, load the block into the cache

o Replacement Policy: Least Recently Used LRU

Main Memory: GB of memory.

Part : Cache Organization

Determine the number of blocks in the cache.

Determine the number of sets in the cache.

Determine the number of bits used for the block offset, index, and tag.

Part : Cache Access Sequence

The CPU generates the following sequence of memory accesses in hexadecimal:

xxxxxxxxxx

xxxxxxxAxBxCxD

For each of the memory accesses, determine if it results in a cache hit or a cache miss, and simulate the cache replacement process using the LRU policy

Part : Virtual Memory Page Table Simulation

Assume that the CPU uses paging for virtual memory with the following configuration:

Page Size: KB

Virtual Address Space: bit, so the total virtual memory size is GB

Physical Address Space: bit, so the total physical memory size is also GB

Determine the number of pages in virtual memory and the number of frames in physical memory.

Simulate the translation of virtual addresses to physical addresses for each memory access.

Part : Performance Analysis

Calculate the cache hit ratio and miss penalty based on the cache access sequence.

Calculate the page fault rate and determine the effective memory access time EMAT considering a page fault penalty of cycles.

Exercise :

A CPU produces the following sequence of read addresses in hexadecimal:

A CC F A DC

The word size is bits.

Assume an word cache that is initially empty.

Implement a Least Recently Used LRU replacement policy.

For each of the following cache types, determine whether each address produces a hit or a miss:

Direct Mapping

Fully Associative

Twoway setassociative

Task:

Fill in the table with Address Hex Address Binary Direct Mapping, Fully Associative, and Way Set Associative.

Sketch the cache after processing all addresses and note replacements.

Compare the hit ratio for each cache type.

Discuss how changing the cache design to use words per block would affect the hitmiss behavior.

Explain the impact of miss penalty on the system performance.

Step by Step Solution

There are 3 Steps involved in it

1 Expert Approved Answer

Step: 1 Unlock

Question Has Been Solved by an Expert!

Get step-by-step solutions from verified subject matter experts

Step: 2 Unlock

Step: 3 Unlock