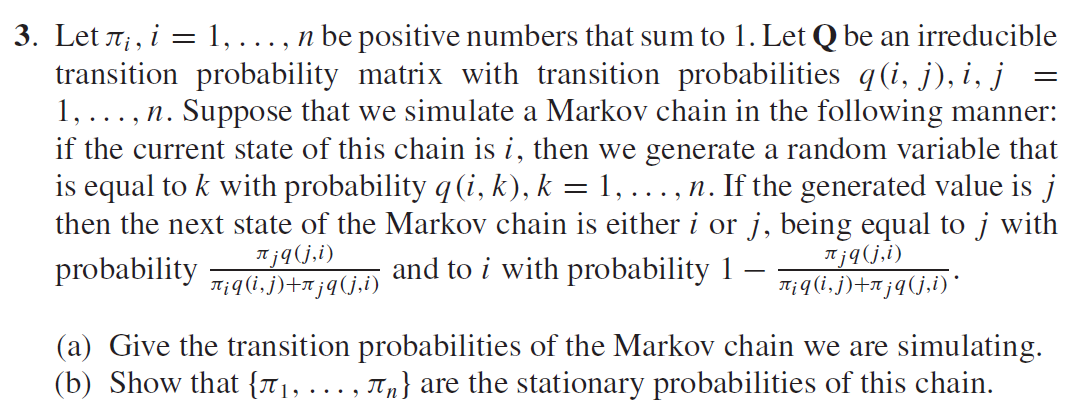

Question: Let $\pi_i$, $i = 1, \dots, n$, be positive numbers that sum to 1. Let $Q$ be an irreducible transition probability matrix with transition probabilities $q(i, j)$, $i, j = 1, \dots, n$. Suppose that we simulate a Markov chain in the following manner: if the current state of this chain is $i$, then we generate a random variable that is equal to $k$ with probability $q(i, k)$, $k = 1, \dots, n$. If the generated value is $j$, then the next state of the Markov chain is either $i$ or $j$, being equal to $j$ with probability

$$\frac{\pi_j\, q(j, i)}{\pi_i\, q(i, j) + \pi_j\, q(j, i)}$$

and to $i$ with probability

$$1 - \frac{\pi_j\, q(j, i)}{\pi_i\, q(i, j) + \pi_j\, q(j, i)}.$$

(a) Give the transition probabilities of the Markov chain we are simulating.

(b) Show that $\{\pi_1, \dots, \pi_n\}$ are the stationary probabilities of this chain.
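
As a numerical sanity check on parts (a) and (b), the sketch below builds the transition matrix implied by the simulation rule and confirms that the target weights are left unchanged by one step of the chain. It assumes that, for j ≠ i, the chain moves from i to j with probability q(i, j) times the acceptance probability stated in the problem, and that all remaining mass stays at i. The function names `transition_matrix` and `left_multiply`, and the example values of `pi` and `q`, are illustrative choices and not part of the original exercise.

```python
def transition_matrix(pi, q):
    """Build P for the simulated chain from target weights pi and proposal matrix q."""
    n = len(pi)
    p = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            # j is never proposed from i when q[i][j] is zero; i == j is handled below.
            if j == i or q[i][j] == 0.0:
                continue
            denom = pi[i] * q[i][j] + pi[j] * q[j][i]
            # Propose j with probability q(i, j), then accept with the stated probability.
            p[i][j] = q[i][j] * pi[j] * q[j][i] / denom
        # The diagonal entry is still 0.0 here, so this is 1 minus the off-diagonal mass.
        p[i][i] = 1.0 - sum(p[i])
    return p


def left_multiply(pi, p):
    """Return the row vector pi * P."""
    n = len(pi)
    return [sum(pi[i] * p[i][j] for i in range(n)) for j in range(n)]


# Illustrative inputs (assumed for this sketch): positive weights summing to 1
# and an irreducible proposal matrix whose rows each sum to 1.
pi = [0.2, 0.3, 0.5]
q = [
    [0.1, 0.6, 0.3],
    [0.4, 0.2, 0.4],
    [0.5, 0.25, 0.25],
]

p = transition_matrix(pi, q)
pi_next = left_multiply(pi, p)
max_error = max(abs(a - b) for a, b in zip(pi, pi_next))
print("max |pi P - pi| =", max_error)
```

The check works because the product π_i P(i, j) is symmetric in i and j under this construction, which is the detailed balance condition; a numerical match for one example illustrates the claim in (b) but does not replace the proof.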

