Question:

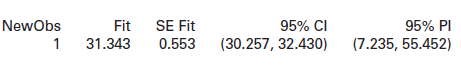

In Exercise 10.50 we consider a simple linear model to predict Time in minutes for Atlanta commuters based on Distance in miles using the data in CommuteAtlanta. For a 20 mile commute the predicted time is 31.34 minutes. Here is some output containing intervals for this prediction.

(a) Interpret the "95% CI" in the context of this data situation.

(b) In Exercise 10.50 we find that the residuals for this model are skewed to the right with some large positive outliers. This might cause some problems with a prediction interval that tries to capture this variability. Explain why the 95% prediction interval in the output is not very realistic.

Exercise 10.50

The data in CommuteAtlanta show information on both the commute distance (in miles) and time (in minutes) for a sample of 500 Atlanta commuters. Suppose that we want to build a model for predicting the commute time based on the distance.

| NewObs | Fit | SE Fit | 95% CI | 95% PI |
|---|---|---|---|---|
| | 31.343 | 0.553 | (30.257, 32.430) | (7.235, 55.452) |
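The relationship between the two intervals can be checked with a little arithmetic. The sketch below is not part of the exercise or its official solution. It assumes the usual regression formulas (interval = fit +/- t* x standard error, and squared prediction error = squared residual standard deviation + squared SE Fit), and all variable names are illustrative. It backs the multiplier t* out of the printed CI rather than looking it up, then uses it to see what the PI implies.

```python
import math

# Values copied from the output above.
fit = 31.343            # predicted time (minutes) at Distance = 20 miles
se_fit = 0.553          # standard error of the fitted mean
ci = (30.257, 32.430)   # 95% CI for the mean commute time
pi = (7.235, 55.452)    # 95% PI for an individual commute time
distance_miles = 20

# Midpoints: both intervals should be centered on the fit.
ci_mid = (ci[0] + ci[1]) / 2
pi_mid = (pi[0] + pi[1]) / 2

# Half-widths (margins of error).
ci_half = (ci[1] - ci[0]) / 2
pi_half = (pi[1] - pi[0]) / 2

# Assumption: CI = fit +/- t* * SE Fit, so t* can be recovered from the output.
t_star = ci_half / se_fit

# Assumption: PI = fit +/- t* * se_pred, with se_pred^2 = s^2 + se_fit^2,
# where s is the residual standard deviation of the model.
se_pred = pi_half / t_star
s_resid = math.sqrt(se_pred**2 - se_fit**2)

# Average speed a commuter would need to hit the lower end of the PI.
speed_at_lower_mph = distance_miles / (pi[0] / 60)

print(f"CI midpoint {ci_mid:.3f}, PI midpoint {pi_mid:.3f}, fit {fit}")
print(f"implied t* = {t_star:.3f}")
print(f"implied residual SD = {s_resid:.2f} minutes")
print(f"speed at lower PI bound = {speed_at_lower_mph:.0f} mph")
```

Under these assumptions the multiplier comes out near 1.96, as expected for a sample of 500. Both intervals are symmetric about the fit, so the PI reaches as far below 31.34 minutes as above it, and its lower end would require covering 20 miles at an average of roughly 166 mph. That symmetry is hard to square with residuals that are skewed to the right.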

Step by Step Solution

(a) We are 95% sure that the mean commute time for al...
